Chapter 5 Portfolio Theory: Mean-Variance Optimisation and the CAPM

In the previous chapters we focused on the introduction of risk and return properties for individual assets as well as portfolios. Further, we introduced the concept of co-dependence both between individual assets as well between an asset and its underlying market structure. Therein, we derived important notions on the statistical requirements to draw causal conclusions. Within these chapters, we focused exclusively on the relationship between risk and return. Naturally, one definition we made was that risk must be compensated for with an appropriate rate of return. Noted as risk parity hypothesis, we created measures that put both factors into relation to each other and compared these ratios throughout a number of asset classes. In this chapter, we now take the underlying model on asset returns, the ideas on risk and return characteristics of portfolios as well as the statistical properties introduced and combine them to form the basis of fempirical portfolio analysis frameworks. Specifically, we present the well-known Mean-Variance portfolio analysis concept for optimal asset allocations, first presented by Henry Markowitz (1959). This theory serves as fundament for most modern asset management classes. The framework uses concepts and assumptions we introduced in previous chapters. As such, it is assumed that the asset returns are normally distributed and investor preferences solely depend on risk and return characteristics. According to the risk parity hypothesis, additional risk must be compensated for by higher returns. Consequently, investors are favorable towards portfolios with high returns and unfavorable towards such with higher variances. As risk and return appear to be positively correlated, investors face a trade-off of these metrics between individual portfolios. Markowitz proposes a framework which quantifies this mean-variance trade off and creates a method to define the optimal portfolio choice.

Henceforth, in this chapter we provide the theoretical foundations as well as the practical application to the Markowitz portfolio optimisation framework. Therein, we first cover the case of two risky and one risk-free asset. We will use this setting to facilitate the main notions as well as replicate the major results and implications of Markowitz’s ideas, both graphically as well with forms of matrix algebra. Later on, we will generalise the simple model into a setting with multiple risky assets. Building on this, we introduce the concept of short sales contracts and, eventually, give rise to the notion of portfolio risk budgeting.

5.1 Markowitz Portfolio Theory with two Risky Assets

5.1.1 The case of portfolios

In chapter two, we already discussed the concept of risk and return. Therein, we defined what a portfolio return is, how we can calculate different forms of returns, what co-dependence properties are and how we can quantify them in order to calculate the appropriate amount of risk for a portfolio. These definitions led us to the concept of portfolio risk and return relationships, where we defined the following:

\[ \begin{align*} \mu_P = E[R_P] &= x_AR_A + x_BR_B \\ \sigma_P^2 &= x_A^2\sigma_A^2 + x_B^2\sigma_B^2 + 2x_Ax_B\sigma_{AB}\\ \sigma_{AB} &= \rho_{AB}\sigma_A\sigma_B \\ x_A + x_B &= 1 \\ R_P &\sim N(\mu_P, \sigma_P^2) \end{align*} \]

where we derived each concept in the previous chapters. Regarding the co-dependence property, we stated that a non perfectly correlated portfolio will reduce overall portfolio risk, due to the concept of diversification. Thus finding assets with non-perfectly positively correlated returns can be beneficial when forming portfolios because risk, as measured by portfolio standard deviation, can be reduced. We also derived this property in the linear algebra introduction. This concepts proves that a risk reduction effect is evident in long-only, two and multiple asset cases when forming portfolios, with the exact amount of reduction quantified by the correlation coefficient, \(\rho_{AB}\).

5.1.2 The set of attainable portfolios

Looking at portfolios, we also introduced the concept of weights. We stated that, in order to create portfolios, we need to define which assets receive which weights and how this weight distribution, or allocation, can influence both risk and return characteristics. Naturally, putting more weight on assets with higher volatility and higher returns will increase both the expected return as well as the risk of a portfolio. Throughout, we specified two weighting concepts: Equally-Weighted as well as Value-Weighted portfolios, whereas the former uses a 1/N weighting ratio, while the latter uses the inverse of the market capitalisation ratio to define weights of individual assets.

However, in theory, we could use any weighting scheme we want in order to form portfolio, as long as the sum of all weights add up to one. This brings us to the next theory. The set of all attainable, or feasible, portfolios is defined as all portfolios that can be created by changing the portfolio weights of the individual assets, as long as their sum adds up to one.

This theorem creates the well-known, parabola shaped relationship of risk and return for a portfolio when varying the weights of its components. Without going too deep into the mathematics, let’s visualize this relationship for the case of a portfolio consisting of two assets.

# First define some return and risk characteristics

mu_A = 0.149

sigma_A = 0.265

mu_B = 0.067

sigma_B = 0.167

rho_AB = -0.135

sigma_AB = sigma_B*sigma_A*rho_AB

# Then, we also define a sequence of 30 portfolio weights for A and B

x_A = seq(from=-0.8, to=2.2, by=0.1)

x_B = 1 - x_A

# Create the expected return as well as the variance and standard deviation of each portfolios

mu_AB = x_A*mu_A + x_B*mu_B

var_AB = x_A^2*sigma_A^2 + x_B^2*sigma_B^2 + 2*x_A*x_B*sigma_AB

sd_AB = sqrt(var_AB)

# Create a data frame for the relationship

risk_return_df <- as.data.frame(cbind(mu_AB, sd_AB, x_A, x_B))

colnames(risk_return_df) <- c("Portfolio_Return", "Portfolio_Risk", "Weight_A", "Weight_B")

# Now, let's visualise the relationship

risk_return_df %>%

ggplot(aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point() +

geom_point(data = subset(risk_return_df, Weight_A >= 0 & Weight_B >= 0), color = "goldenrod", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df, Portfolio_Risk == min(Portfolio_Risk)), color = "dodgerblue3", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df, Weight_A == 1), color = "springgreen4", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df, Weight_B == 1), color = "darkorchid1", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

annotate('text',x = 0.22 ,y = 0.16, label = paste('PF of Asset A only'), size = 3, color = "springgreen4") +

annotate('text',x = 0.22 ,y = 0.07, label = paste('PF of Asset B only'), size = 3, color = "darkorchid1") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Portfolio Frontier for 30 PFs formed with differing weights of two assets") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

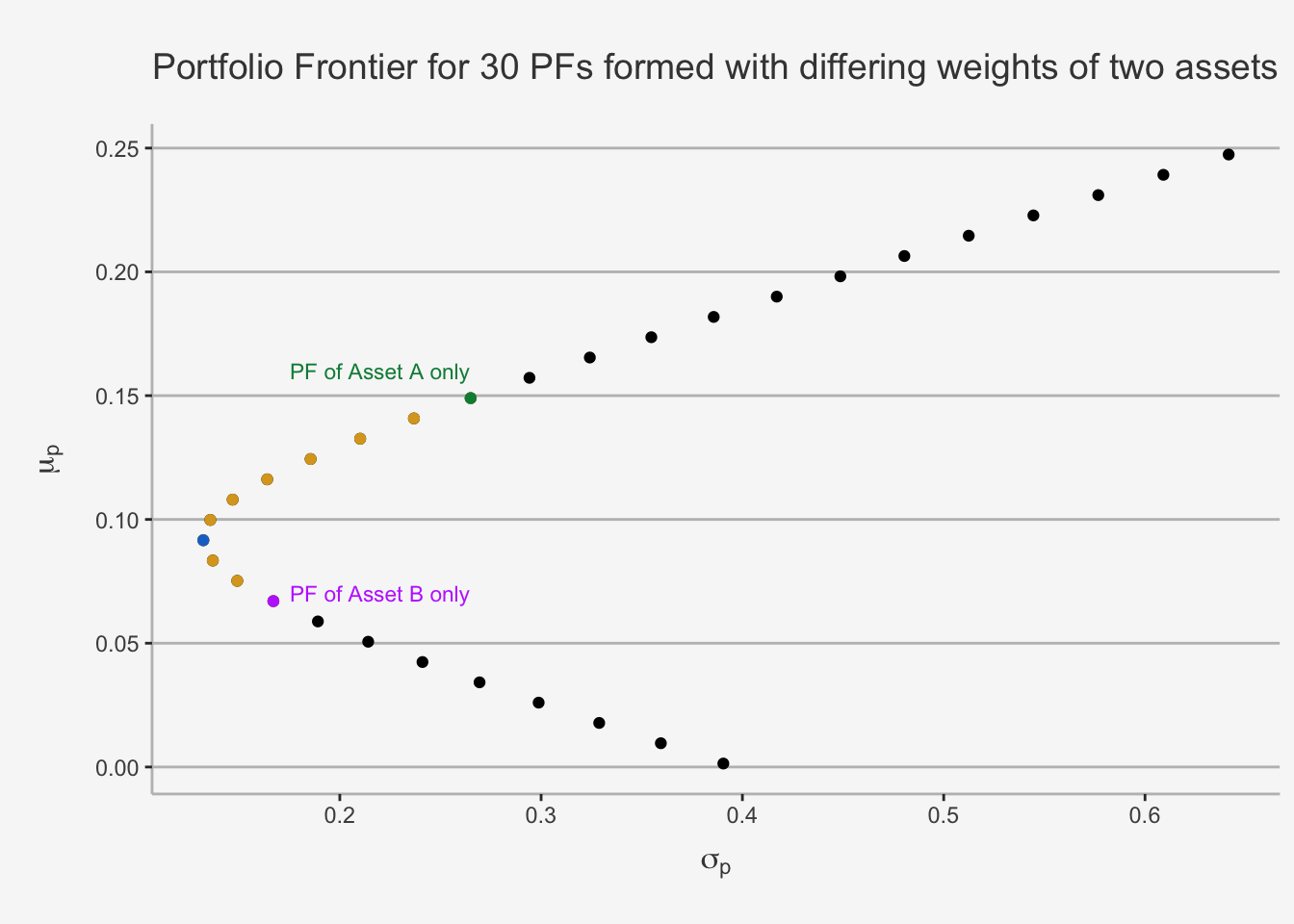

This plot is referred to as Markowitz Bullet. The black dots represent 30 distinct risk and return combinations, created by varying the individual constituent weights. The golden, purple, green and blue dots represent long-only portfolios. The black dots represent long-short strategies of two assets. Further, the purple and the green dot represent portfolios consisting of only the Asset B and A, respectively. Moreover, the blue dot represents the global minimum variance portfolio, a notion which we will cover in more detail later.

This shape is vital for the portfolio management theory as it displays the concept of diversification quite clearly. The idea that we just visualised behind the diversification potential can be understood as follows. Suppose we first have an investment in Asset B only. We can diversify and improve our portfolio metrics by rebalancing the portfolio such that we now include some of Asset A. This is indicated by th golden dots. As we can see, diversification increases the expected return while simultaneously decreasing the risk associated. Consequently, these portfolios are superior to the B only case. This is achieved until we reach the blue dot, which displays the minimum variance of a portfolio. This is also known as minimum-variance portfolio. Following this portfolio, we see that both risk and return increase monotonically. Consequently, the “optimal” portfolio in these cases depend on the investor preferences. We then follow a long-only strategy until we reach the green dot, which only consists of portfolio A. Afterwards, we follow with a long-A and short-B strategy.

5.1.3 The Minimum-Variance Portfolio

The Minimum-Variance (MV) Portfolio is a key concept in Markowitz’s Portfolio optimisation. It is used to give you an intuition on how to form weights on the portfolio frontier. It can be derived using a constrained optimisation problem.

We make a small exercise in calculus, using the substitution method, to derive the weight of the MV portfolio. As a side note, you can also use the Lagrangian method to determine the weights.

To get the MV portfolio, we use the minimisation of the portfolio variance, subject to the fact that both weights must add up to 1.

\[ \begin{align} \min_{x_A, x_B} \sigma_P^2 &= x_A\sigma_A^2 + x_B\sigma_B^2 + 2x_Ax_B\sigma_{AB} \\ \text{s.t. } x_a + x_B &= 1 \end{align} \] Let’s start with the optimisation. If we substitute \(x_B = 1 - x_A\), we obtain

\[ \begin{align} \min_{x_A} \sigma_P^2 &= x_A\sigma_A^2 + x_B\sigma_B^2 + 2x_Ax_B\sigma_{AB} \\ &= x_A^2\sigma_A^2 +(1-x_A)^2\sigma_B^2 + 2x_A(1-x_A)\sigma_{AB} && x_B = 1-x_A \\ \frac{d\sigma_P^2}{dx_A} = 0 &= 2x_A\sigma_A^2 - 2(1-x_A)\sigma_B^2 + 2\sigma_{AB} -4x_A\sigma_{AB} \\ &= 2x_A\sigma_A^2 - 2(1-x_A)\sigma_B^2 + 2\sigma_{AB}(1-x_A) \end{align} \]

If we now solve for \(x_A\), we obtain the optimal weight of Asset A and Asset B for the MV portfolio as:

\[ \begin{align} x_A &= \frac{\sigma_B^2 - \sigma_{AB}}{\sigma_A^2 + \sigma_B^2 - 2\sigma_{AB}}\\ x_B &= 1 - x_A \end{align} \]

In the case of our assets, let’s quickly calculate the respective weights:

x_A_MV = (sigma_B^2 - sigma_AB)/(sigma_A^2 + sigma_B^2 - 2*sigma_AB)

x_B_MV = 1 - x_A_MV

mu_MV = mu_A*x_A_MV + mu_B*x_B_MV

sd_MV = sqrt(sigma_A^2*x_A_MV^2 + sigma_B^2*x_B_MV^2 + 2*x_A_MV*x_B_MV*sigma_AB)

MV_df <- as.data.frame(cbind(x_A_MV, x_B_MV, mu_MV, sd_MV))

colnames(MV_df) <- c("Weight Asset A", "Weight Asset B", "Expected Return", "Volatility (StD)")

MV_df## Weight Asset A Weight Asset B Expected Return Volatility (StD)

## 1 0.3076735 0.6923265 0.09222923 0.13217465.1.4 The role of correlation on the frontier of portfolios

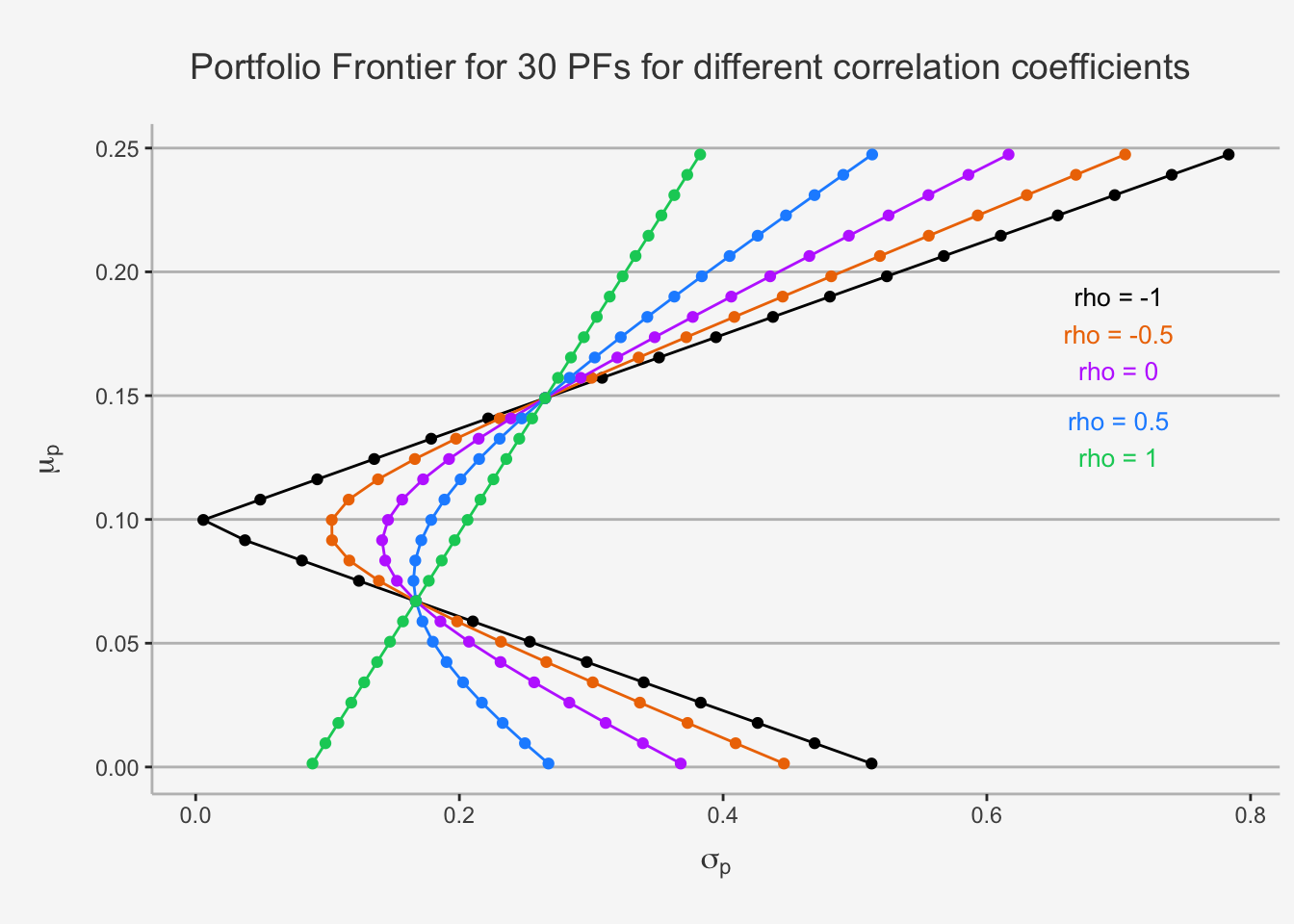

As we said, the portfolio frontier shows the correlation properties between assets and can therein display the diversification potential. As such, it is interesting to see how the portfolio changes with varying correlations. In order to show this, we need to slightly adjust the formula for the variance such that we are able to write the formula in terms of the correlation coefficient, \(\rho_{AB}\).

# Let's define the variance as follows:

# First define some return and risk characteristics

mu_A = 0.149

sigma_A = 0.265

mu_B = 0.067

sigma_B = 0.167

# Then, we also define a sequence of 30 portfolio weights for A and B

x_A = seq(from=-0.8, to=2.2, by=0.1)

x_B = 1 - x_A

# Calculate the mean return (the same for all as it does not depend on the rho's)

mu_AB = x_A*mu_A + x_B*mu_B

# Define a list with rho's

rho <- c(-1, -0.5, 0, 0.5, 1)

# Create the different standard deviations

for (i in rho){

cov_AB = sigma_B*sigma_A*i

var_AB = x_A^2*sigma_A^2 + x_B^2*sigma_B^2 + 2*x_A*x_B*cov_AB

sd_AB = sqrt(var_AB)

if (i == -1){

sd_AB_final <- sd_AB

}

else {

sd_AB_final <- cbind(sd_AB_final, sd_AB)

}

}

sd_AB_final_df <- as.data.frame(cbind(mu_AB, sd_AB_final))

colnames(sd_AB_final_df) <- c("Return", "rho_1_neg", "rho_0.5_neg", "rho0", "rho0.5", "rho1")

# Visualise the relationship

sd_AB_final_df %>%

ggplot(aes(x= rho_1_neg, y = Return)) +

geom_point() +

geom_path() +

geom_point(color = "darkorange2", aes(x= rho_0.5_neg, y = Return)) +

geom_path(color = "darkorange2", aes(x= rho_0.5_neg, y = Return)) +

geom_point(color = "darkorchid1", aes(x= rho0, y = Return)) +

geom_path(color = "darkorchid1", aes(x= rho0, y = Return)) +

geom_point(color = "dodgerblue1", aes(x= rho0.5, y = Return)) +

geom_path(color = "dodgerblue1", aes(x= rho0.5, y = Return)) +

geom_point(color = "springgreen3", aes(x= rho1, y = Return)) +

geom_path(color = "springgreen3", aes(x= rho1, y = Return)) +

annotate('text',x = 0.70 ,y = 0.19, label = paste('rho = -1'), size = 3.5) +

annotate('text',x = 0.70 ,y = 0.175, label = paste('rho = -0.5'), size = 3.5, color = "darkorange2") +

annotate('text',x = 0.70 ,y = 0.16, label = paste('rho = 0'), size = 3.5, color = "darkorchid1") +

annotate('text',x = 0.70 ,y = 0.14, label = paste('rho = 0.5'), size = 3.5, color = "dodgerblue1") +

annotate('text',x = 0.70 ,y = 0.125, label = paste('rho = 1'), size = 3.5, color = "springgreen3") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Portfolio Frontier for 30 PFs for different correlation coefficients") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

5.1.5 Efficient Portfolios with two risky assets

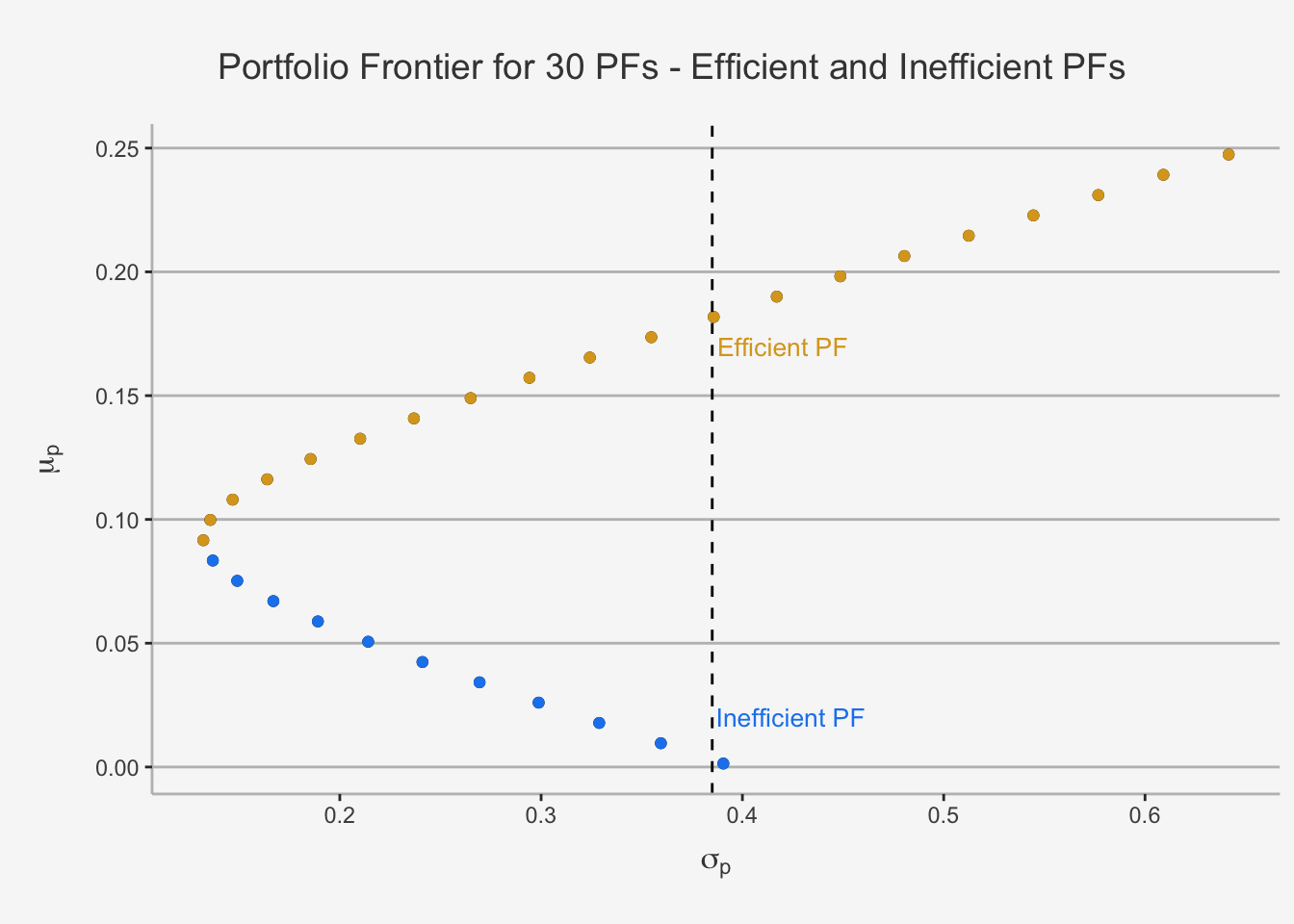

As we have seen, the bullet shape of each portfolio combination splits the portfolio set horizontally into two regions, based on their respective risk and return relation. To be precise, we can observe that, for a quasi identical level of risk we can attain two vastly different levels of expected portfolio return. Based on this observation, we can sort the portfolio into two regions: Efficient and Inefficient portfolios.

Efficient Portfolios are the set of all attainable portfolios that have the highest return for any given level of risk (measured by its standard deviation). Graphically, these are all the portfolios that are at or above the MV portfolio.

Inefficient Portfolios are the set of all attainable portfolios that do not have the highest return for any given level of risk (measured by its standard deviation). Graphically, these are all the portfolios that are at or below the MV portfolio.

We can visually depict this as follows:

# First define some return and risk characteristics

mu_A = 0.149

sigma_A = 0.265

mu_B = 0.067

sigma_B = 0.167

rho_AB = -0.135

sigma_AB = sigma_B*sigma_A*rho_AB

# Then, we also define a sequence of 30 portfolio weights for A and B

x_A = seq(from=-0.8, to=2.2, by=0.1)

x_B = 1 - x_A

# Create the expected return as well as the variance and standard deviation of each portfolios

mu_AB = x_A*mu_A + x_B*mu_B

var_AB = x_A^2*sigma_A^2 + x_B^2*sigma_B^2 + 2*x_A*x_B*sigma_AB

sd_AB = sqrt(var_AB)

# Create a data frame for the relationship

risk_return_df <- as.data.frame(cbind(mu_AB, sd_AB, x_A, x_B))

colnames(risk_return_df) <- c("Portfolio_Return", "Portfolio_Risk", "Weight_A", "Weight_B")

# Now, let's visualise the relationship

risk_return_df %>%

ggplot(aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point() +

geom_point(data = subset(risk_return_df, Portfolio_Return >= 0.0916), color = "goldenrod", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df, Portfolio_Return < 0.0916), color = "dodgerblue2", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Portfolio Frontier for 30 PFs - Efficient and Inefficient PFs") +

geom_vline(xintercept = 0.385, linetype = "dashed") +

annotate('text',x = 0.42 ,y = 0.17, label = paste('Efficient PF'), size = 3.5, color = "goldenrod") +

annotate('text',x = 0.424 ,y = 0.02, label = paste('Inefficient PF'), size = 3.5, color = "dodgerblue2") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

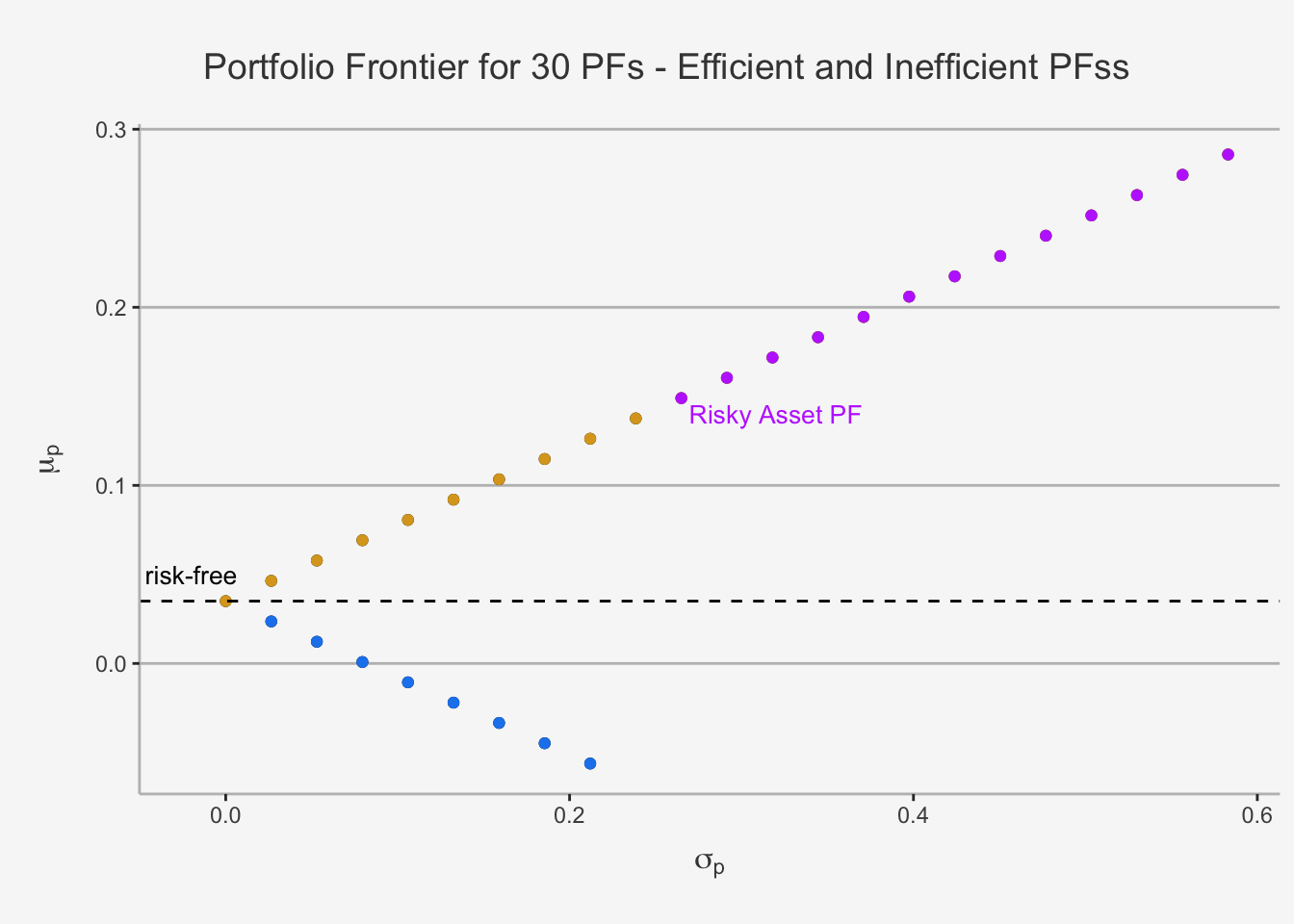

5.2 Markowitz Portfolio Theory with a Risky and a Risk-Free Asset

In the previous chapters we also introduced the concept of a risk-free asset. In essence, risk-free assets are such assets that always have the same pay-off, irrespective of the state of the world. Thus, their volatility structure is constant. As a consequence, they have a quasi-zero variance and standard deviation. Prominent examples of risk-free assets are the Swiss 1 Year Government Bond with which we have worked throughout the class or the US T-Bills.

These notions imply the following properties of risk free assets, \(r_f\):

\[ \begin{align} E[r_f] &= r_f \\ \sigma_f^2 &= 0 \\ cov(r_A, r_f) &= 0 \end{align} \]

If we introduce the risk-free asset, into the portfolio theory, our portfolio return becomes:

\[ R_P = x_fr_f + x_Ar_A = (1-x_A)r_f + x_Ar_A = r_f + x_A(r_A - r_f) \]

whereas \(x_A(r_A - r_f)\) is the weighted excess return of the risky asset over the risk-free asset. Consistent with the risk parity hypothesis, we expect this excess return to be positive, as rational investors expect a higher return when incorporating riskier assets to compensate for said increase in risk.

In said case, we can calculate both the portfolio expected return as well as the variance as:

\[ \begin{align} \mu_P &= r_f + x_A(\mu_A - r_f) \\ \sigma_P &= x_A\sigma_A \end{align} \] In case of a risky and a risk-free asset, we can draw the portfolio frontier in a similar fashion to the frontier assuming that we have two perfectly negatively correlated assets:

# First define some return and risk characteristics

mu_A = 0.149

sigma_A = 0.265

mu_f = 0.035

sigma_f = 0

rho_Af = 0

sigma_Af = 0

# Then, we also define a sequence of 30 portfolio weights for A and B

x_A = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_A

# Create the expected return as well as the variance and standard deviation of each portfolios

mu_Af = x_A*mu_A + x_f*mu_f

var_Af = x_A^2*sigma_A^2 + x_f^2*sigma_f^2 + 2*x_A*x_f*sigma_Af

sd_Af = sqrt(var_Af)

# Create a data frame for the relationship

risk_return_df_rf <- as.data.frame(cbind(mu_Af, sd_Af, x_A, x_f))

colnames(risk_return_df_rf) <- c("Portfolio_Return", "Portfolio_Risk", "Weight_A", "Weight_B")

# Now, let's visualise the relationship

risk_return_df_rf %>%

ggplot(aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point() +

geom_point(data = subset(risk_return_df_rf, Portfolio_Return >= 0.0350), color = "goldenrod", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df_rf, Portfolio_Return < 0.0350), color = "dodgerblue2", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df_rf, Weight_A >= 1), color = "darkorchid1", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

annotate('text',x = -0.02 ,y = 0.05, label = "risk-free", size = 3.5, color = "black") +

annotate('text', x = 0.32 ,y = 0.14, label = paste('Risky Asset PF'), size = 3.5, color = "darkorchid1") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Portfolio Frontier for 30 PFs - Efficient and Inefficient PFss") +

geom_hline(yintercept = 0.0350, linetype = "dashed") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

5.2.1 The Capital Allocation Line (CAL)

As we can see, we again obtain two straight lines, consisting of inefficient and efficient portfolios.

Under the assumption that \(x > 0\) (no short sales) we can use the variance equation and solve for x:

\[ x_A = \frac{\sigma_P} {\sigma_A} \]

This implies that the optimal weight of the risky asset is given by the variance of the asset relative to the variance of the portfolio. As such, the return of the risky asset is weighted by the inverse of the asset risk relative to the overall portfolio risk. If we now substitute this result into the expected return, we obtain:

\[ \mu_P = r_f + \frac{\mu_A - r_f}{\sigma_A}\cdot \sigma_P \]

This variable is called the Capital Allocation Line (CAL). This is exactly the line that consists of the dots we just displayed. It describes how the investment is allocated between the risk-free asset and the risky asset. For dots in close proximity to the risk-free asset, we understand that most of the capital is allocated in riskless assets (consequently, the return is close to the risk-free). The further we deviate from the risk-free asset, the more we allocate into the risky assets, with the (theoretical) maximum of a portfolio consisting only of the risky asset. The purple dots represent long-short portfolios where the risk-free asset is shorted to invest in additional quantities of the risky asset.

The slope of the CAL is familiar to us. If we take the derivative w.r.t \(\sigma_P\), we obtain:

\[ \frac{d\mu_P}{d\sigma_P} = \frac{\mu_A - r_f}{\sigma_A} \]

Note from the previous chapters that it puts an excess return in relation to the risk. This is also known as Sharpe Ratio, which measures the risk premium per additional unit of risk. The Sharpe ratio ultimately quantifies the efficiency of different risky and risk-free portfolio combinations. In essence, we understand that the portfolio with the higher Sharpe Ratio is more efficient than the portfolio with the lower ratio. Consequently, these portfolio variations according to weight assignments will induce a steeper slope. This is the reason for the appeal of the Sharpe Ratio in investment assessments.

# First define some return and risk characteristics

mu_A = 0.149

sigma_A = 0.265

mu_B = 0.074

mu_B = 0.067

sigma_B = 0.167

mu_f = 0.035

sigma_f = 0

rho_Af = 0

sigma_Af = 0

rho_Bf = 0

sigma_Bf = 0

# Then, we also define a sequence of 30 portfolio weights for A and B

x_A = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_A

# Create the expected return as well as the variance and standard deviation of each portfolios

mu_Af = x_A*mu_A + x_f*mu_f

var_Af = x_A^2*sigma_A^2 + x_f^2*sigma_f^2 + 2*x_A*x_f*sigma_Af

sd_Af = sqrt(var_Af)

# Do the same for PF B

x_B = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_B

mu_Bf = x_B*mu_B + x_f*mu_f

var_Bf = x_B^2*sigma_B^2 + x_f^2*sigma_f^2 + 2*x_B*x_f*sigma_Bf

sd_Bf = sqrt(var_Bf)

# Create a data frame for the relationship

risk_return_df_rf <- as.data.frame(cbind(mu_Af, sd_Af, mu_Bf, sd_Bf, x_A, x_f))

colnames(risk_return_df_rf) <- c("Portfolio_Return_A", "Portfolio_Risk_A", "Portfolio_Return_B", "Portfolio_Risk_B", "Weight_A", "Weight_B")

# Subset with only efficient PFs

risk_return_df_rf_sub <- subset(risk_return_df_rf, Weight_A >= 0)

# Now, let's visualise the relationship

risk_return_df_rf_sub %>%

ggplot(aes(x= Portfolio_Risk_A, y = Portfolio_Return_A)) +

geom_point(color = "goldenrod",) +

geom_point(color = "dodgerblue2", aes(x= Portfolio_Risk_B, y = Portfolio_Return_B)) +

annotate('text',x = 0.35 ,y = 0.16, label = "Portfolio A CAL", size = 3.5, color = "goldenrod") +

annotate('text',x = 0.25 ,y = 0.065, label = "Portfolio B CAL", size = 3.5, color = "dodgerblue2") +

annotate('text',x = -0.02 ,y = 0.05, label = "risk-free", size = 3.5, color = "black") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Portfolio Frontier for 30 PFs - Comparison of risk-free and risky portfolios") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

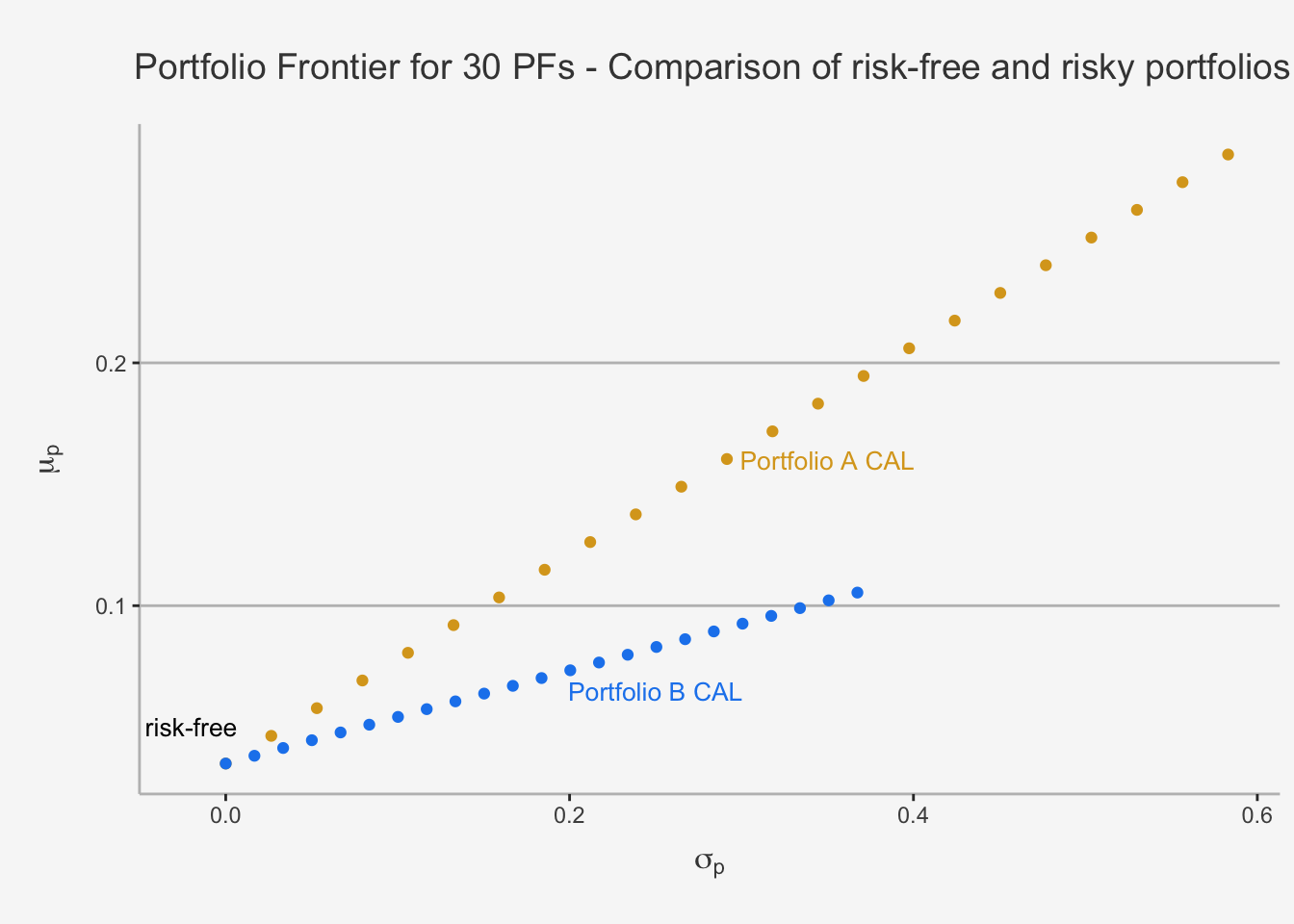

As we can see, the Slope of PF A is higher than the slope of PF B. Accordingly, for each unit of risk, we have a superior excess return profile. We can also quantify this by calculating the Sharpe Ratio directly. In our case, this is the following.

## [1] "The Sharpe Ratio of Asset A is 0.43 and the Sharpe Ratio of Asset B is 0.27"5.3 Markowitz Portfolio Theory with two Risky and a Risk-Free Asset

In a next step, we can try to quantify a portfolio consisting of three assets, whereas two are risky and one is risk-free. From earlier results we understand that any linear combination of two portfolios consisting of a risky and the same risk-free asset will also follow a linear slope. Given the two different CALs of portfolio A and B in the previous chapter, it is logical that a weighted average of both returns and risk will quantify the slope of the resulting portfolio. Consequently, the efficient set of portfolios will be a straight line with intercept in \(r_f\). Further, we need to understand whether a linear combination of the assets induces a more efficient portfolio, compared to if we would only invest in one risky asset and the risk-free. As such, we can derive that the three-piece portfolio must have the maximum slope while it still incorporates any linear combination of risky assets. Consequently, we need to solve for a constrained maximisation of the Sharpe Ratio to obtain the optimal, or most efficient, slope and derive the optimal weighting of the individual assets. In order to find this, we need to define the Tangency Portfolio.

5.3.1 Tangency Portfolio

The Tangency Portfolio represents the portfolio in which the CAL of the three-piece portfolio is exactly tangent to the set of differently weighted risky asset only portfolios. In essence, it is the portfolio which is a combination of the two risky and the risk-free assets such that it is in the set of feasible risky assets and maximises the slope of the CAL. It is the portfolio which has the best possible excess return per additional unit of risk. Geometrically speaking, this is the “steepest sloped”, or most efficient, portfolio combination we can attain conditional on the fact that the portfolio must consist of a linear combination of the assets under consideration. As a consequence, portfolios consisting of the risk-free asset as well as the tangency portfolio are the efficient portfolio consisting of risk-free and risky assets.

Let’s visually depict this in more detail below.

# First define some return and risk characteristics

mu_A = 0.149

sigma_A = 0.265

mu_B = 0.074

mu_B = 0.067

sigma_B = 0.167

mu_f = 0.035

sigma_f = 0

rho_Af = 0

sigma_Af = 0

rho_Bf = 0

sigma_Bf = 0

# Then, we also define a sequence of 30 portfolio weights for A and B

x_A = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_A

# Create the expected return as well as the variance and standard deviation of each portfolios

mu_Af = x_A*mu_A + x_f*mu_f

var_Af = x_A^2*sigma_A^2 + x_f^2*sigma_f^2 + 2*x_A*x_f*sigma_Af

sd_Af = sqrt(var_Af)

# Do the same for PF B

x_B = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_B

mu_Bf = x_B*mu_B + x_f*mu_f

var_Bf = x_B^2*sigma_B^2 + x_f^2*sigma_f^2 + 2*x_B*x_f*sigma_Bf

sd_Bf = sqrt(var_Bf)

# Create a data frame for the relationship

risk_return_df_rf <- as.data.frame(cbind(mu_Af, sd_Af, mu_Bf, sd_Bf, x_A, x_f))

colnames(risk_return_df_rf) <- c("Portfolio_Return_A", "Portfolio_Risk_A", "Portfolio_Return_B", "Portfolio_Risk_B", "Weight_A", "Weight_B")

# Subset with only efficient PFs

risk_return_df_rf_sub <- subset(risk_return_df_rf, Weight_A >= 0)

# Now, let's visualise the relationship

risk_return_df_rf_sub %>%

ggplot(aes(x= Portfolio_Risk_A, y = Portfolio_Return_A)) +

geom_point(color = "goldenrod") +

geom_point(data = risk_return_df, aes(x = Portfolio_Risk, y = Portfolio_Return)) +

geom_point(color = "dodgerblue2", aes(x= Portfolio_Risk_B, y = Portfolio_Return_B)) +

annotate('text',x = 0.35 ,y = 0.22, label = "Portfolio A CAL", size = 3.5, color = "goldenrod") +

annotate('text',x = 0.35 ,y = 0.08, label = "Portfolio B CAL", size = 3.5, color = "dodgerblue2") +

annotate('text',x = -0.02 ,y = 0.05, label = "risk-free", size = 3.5, color = "black") +

annotate('text',x = 0.55 ,y = 0.19, label = paste('Set of Risky Asset portfolios'), size = 3, color = "black") +

geom_point(data = subset(risk_return_df, Weight_A == 1), color = "springgreen4", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df, Weight_B == 1), color = "darkorchid1", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df, Weight_B == 0.7), color = "firebrick3", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

annotate('text',x = 0.22 ,y = 0.16, label = paste('PF of Asset A only'), size = 3, color = "springgreen4") +

annotate('text',x = 0.25 ,y = 0.065, label = paste('PF of Asset B only'), size = 3, color = "darkorchid1") +

annotate('text',x = 0.065 ,y = 0.09, label = paste('PF of Asset A and B'), size = 3, color = "firebrick3") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Efficient portfolios, risky asset portfolios and the CAL") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

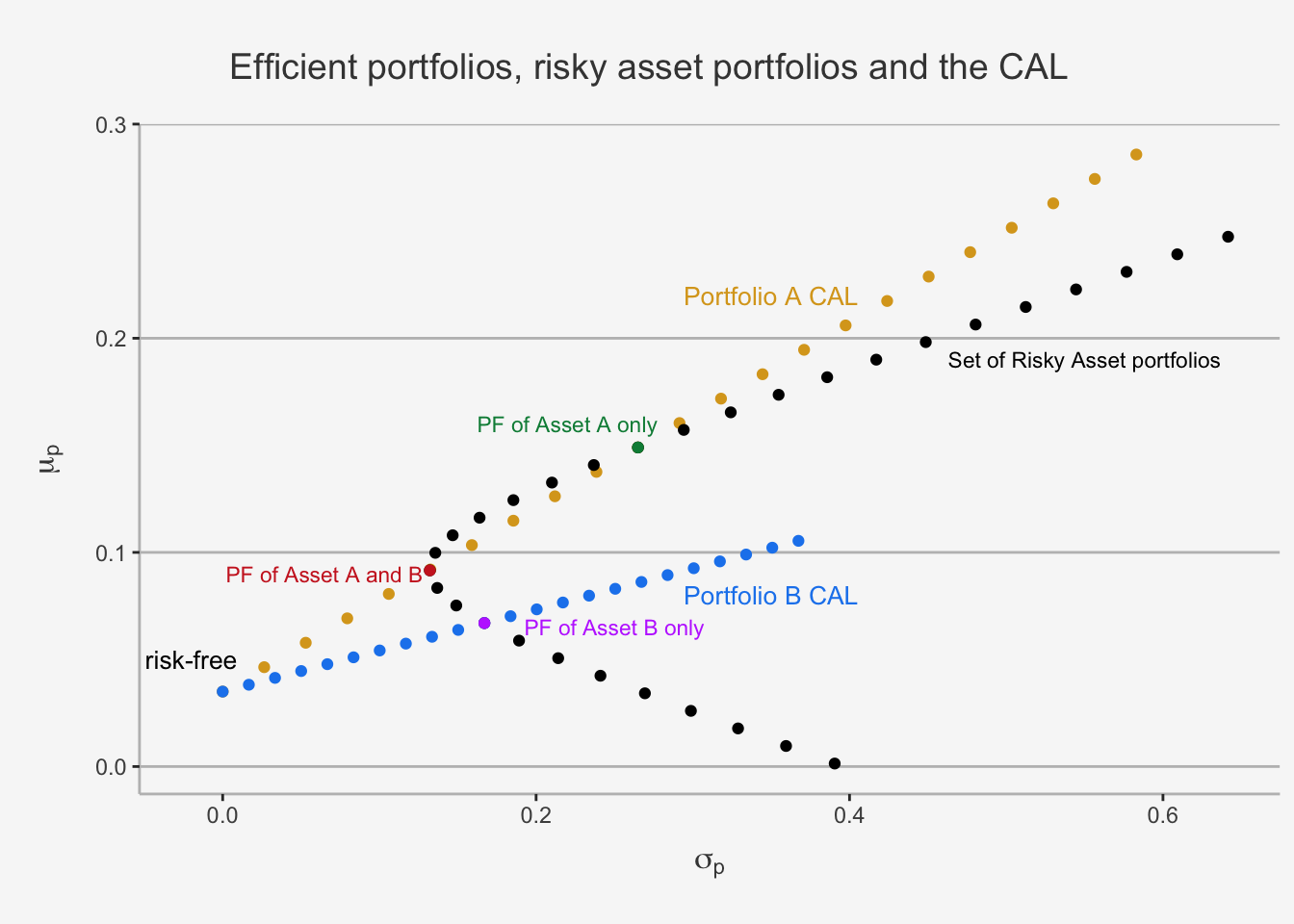

This shows well the constrained optimisation problem. In essence, we need to find a portfolio such that we can have the maximal slope of the CAL of the linear combination of the assets while still be in the set of feasible portfolios, which is defined by the set of risky asset portfolios. Consequently, the resulting portfolio must either cross or be tangent to the weighted risky asset portfolio path. As we can see, this is currently only the case for three weighted portfolios. The first is indicated with a purple dot, displaying the portfolio of only investing in Asset B. The second is the green dot, displaying the portfolio of only investing in Asset A. The third one is the red dot, displaying the portfolio for investing in both Asset A and B (In our case, this is a 30-70 split in Asset A and B, respectively). As we can already see graphically, the red and green portfolio are more efficient than the purple portfolio, and red and green have the same efficiency. We already calculated the individual Sharpe Ratios in the previous chapter.

## [1] "The Sharpe Ratio of Asset A of Asset AB is 0.43 and the Sharpe Ratio of Asset B is 0.27"However, we can further improve the slope of the CAL and thus induce a more efficient portfolio combination by only slightly “touching” the set of feasible risky assets. That is, we can find a slope such that we are tangent to this set. This can be achieved by solving for the tangency portfolio.

Mathematically, we do so by solving a constrained maximisation problem. This problem looks as follows:

\[ \begin{align} \max_{x_A, x_B} \frac{\mu_P - r_f}{\sigma_P} s.t. \\ \mu_P &= x_A\mu_A + x_B\mu_B \\ \sigma_P &= \sqrt{x_A^2\sigma_A^2 + x_B^2\sigma_B^2 + 2x_Ax_B\sigma_{AB}}\\ x_A + x_B &= 1 \end{align} \]

As we already did in the risky assets only case, we can now perform substitution of \(x_B = 1 - x_A\) and insert the conditions into the maximisation problem and then solve w.r.t \(x_A\). Without going into the derivation based on straight-forward calculus methods, when performing all these steps we obtain:

\[ \begin{align} x_A^{t} &= \frac{(\mu_A - r_f)\sigma_B^2 - (\mu_B - r_f)\sigma_{AB}}{(\mu_A - r_f)\sigma_B^2 + (\mu_B - r_f)\sigma_A^2 - (\mu_A + \mu_B - 2r_f)\sigma_{AB}} \\ x_B^{t} &= 1 - x_A^{t} \end{align} \]

With this formula, we obtain the optimal weights for both the risky assets such that we have a three-piece portfolio with the steepest efficient and attainable slope that maximises the excess return per additional unit of risk.

Let’s quickly calculate this.

# Calculate the tangency portfolio weights

x_t_A = ((mu_A - mu_f)*sigma_B^2 - (mu_B - mu_f)*sigma_AB) / ((mu_A - mu_f)*sigma_B^2 + (mu_B - mu_f)*sigma_A^2 - (mu_A + mu_B - 2*mu_f)*sigma_AB)

x_t_B = 1 - x_t_A

# Calculate the mean return and standard deviation

mu_t_AB <- x_t_A*mu_A + x_t_B*mu_B

sigma_t_AB <- sqrt(x_t_A^2*sigma_A^2 + x_t_B^2*sigma_B^2 + 2*x_t_A*x_t_B*sigma_AB)

# Calculate the Sharpe Ratio

SR_t_AB <- (mu_t_AB - mu_f) / sigma_t_AB

# Create a data frame for all three combinations

df_t_AB <- as.data.frame(cbind(x_t_A, x_t_B, mu_t_AB, sigma_t_AB, SR_t_AB))

colnames(df_t_AB) <- c("Weight A", "Weight B", "Expected Return", "Standard Deviation", "Sharpe Ratio")

df_A <- as.data.frame(cbind(1, 0, mu_A, sigma_A, SR_A))

colnames(df_A) <- c("Weight A", "Weight B", "Expected Return", "Standard Deviation", "Sharpe Ratio")

df_B <- as.data.frame(cbind(0, 1, mu_B, sigma_B, SR_B))

colnames(df_B) <- c("Weight A", "Weight B", "Expected Return", "Standard Deviation", "Sharpe Ratio")

# Combine the frame

df_total <- as.data.frame(rbind(round(df_t_AB,3), round(df_A,3), round(df_B, 3)))

rownames(df_total) <- c("Tangency PF", "PF A", "PF B")

# Show the results

df_total## Weight A Weight B Expected Return Standard Deviation Sharpe Ratio

## Tangency PF 0.535 0.465 0.111 0.152 0.499

## PF A 1.000 0.000 0.149 0.265 0.430

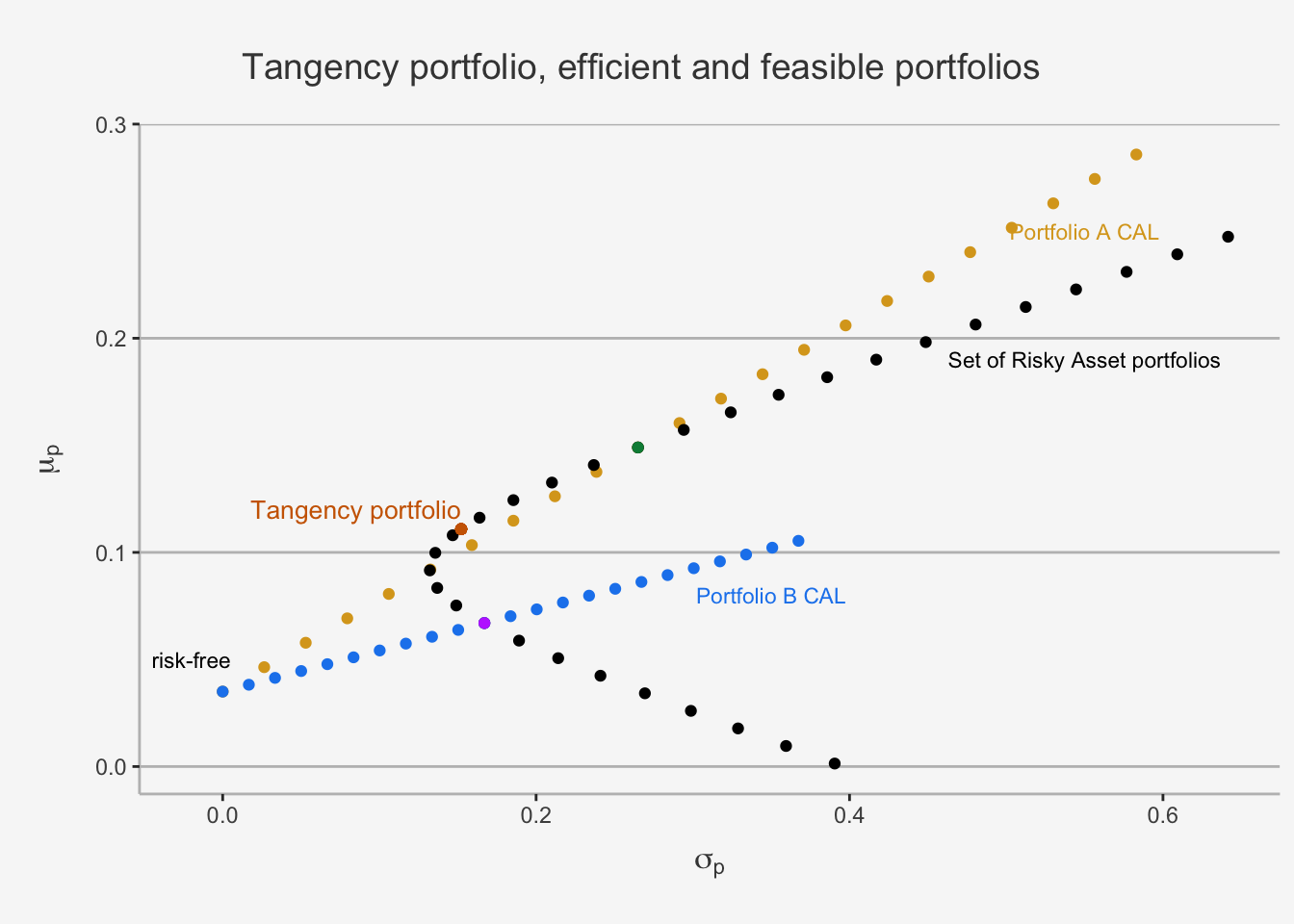

## PF B 0.000 1.000 0.067 0.167 0.192As we can see, a portfolio consisting of 53.5 % of Asset A and 46.5% of Asset B induces the highest Sharpe Ratio and thus has the steepest CAL slope. Consequently, this portfolio is feasible and efficient, as we can visualise below:

# First define some return and risk characteristics

mu_A = 0.149

sigma_A = 0.265

mu_B = 0.074

mu_B = 0.067

sigma_B = 0.167

mu_f = 0.035

sigma_f = 0

rho_Af = 0

sigma_Af = 0

rho_Bf = 0

sigma_Bf = 0

# Then, we also define a sequence of 30 portfolio weights for A and B

x_A = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_A

# Create the expected return as well as the variance and standard deviation of each portfolios

mu_Af = x_A*mu_A + x_f*mu_f

var_Af = x_A^2*sigma_A^2 + x_f^2*sigma_f^2 + 2*x_A*x_f*sigma_Af

sd_Af = sqrt(var_Af)

# Do the same for PF B

x_B = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_B

mu_Bf = x_B*mu_B + x_f*mu_f

var_Bf = x_B^2*sigma_B^2 + x_f^2*sigma_f^2 + 2*x_B*x_f*sigma_Bf

sd_Bf = sqrt(var_Bf)

# Create a data frame for the relationship

risk_return_df_rf <- as.data.frame(cbind(mu_Af, sd_Af, mu_Bf, sd_Bf, x_A, x_f))

colnames(risk_return_df_rf) <- c("Portfolio_Return_A", "Portfolio_Risk_A", "Portfolio_Return_B", "Portfolio_Risk_B", "Weight_A", "Weight_B")

# Subset with only efficient PFs

risk_return_df_rf_sub <- subset(risk_return_df_rf, Weight_A >= 0)

# Now, let's visualise the relationship

risk_return_df_rf_sub %>%

ggplot(aes(x= Portfolio_Risk_A, y = Portfolio_Return_A)) +

geom_point(color = "goldenrod") +

geom_point(data = risk_return_df, aes(x = Portfolio_Risk, y = Portfolio_Return)) +

geom_point(color = "dodgerblue2", aes(x= Portfolio_Risk_B, y = Portfolio_Return_B)) +

annotate('text',x = 0.55 ,y = 0.25, label = "Portfolio A CAL", size = 3, color = "goldenrod") +

annotate('text',x = 0.35 ,y = 0.08, label = "Portfolio B CAL", size = 3, color = "dodgerblue2") +

annotate('text',x = -0.02 ,y = 0.05, label = "risk-free", size = 3, color = "black") +

annotate('text',x = 0.55 ,y = 0.19, label = paste('Set of Risky Asset portfolios'), size = 3, color = "black") +

geom_point(data = subset(risk_return_df, Weight_A == 1), color = "springgreen4", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df, Weight_B == 1), color = "darkorchid1", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(aes(x=sigma_t_AB, y = mu_t_AB), color = "darkorange3") +

annotate('text',x = 0.085 ,y = 0.12, label = "Tangency portfolio", size = 3.5, color = "darkorange3") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Tangency portfolio, efficient and feasible portfolios") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

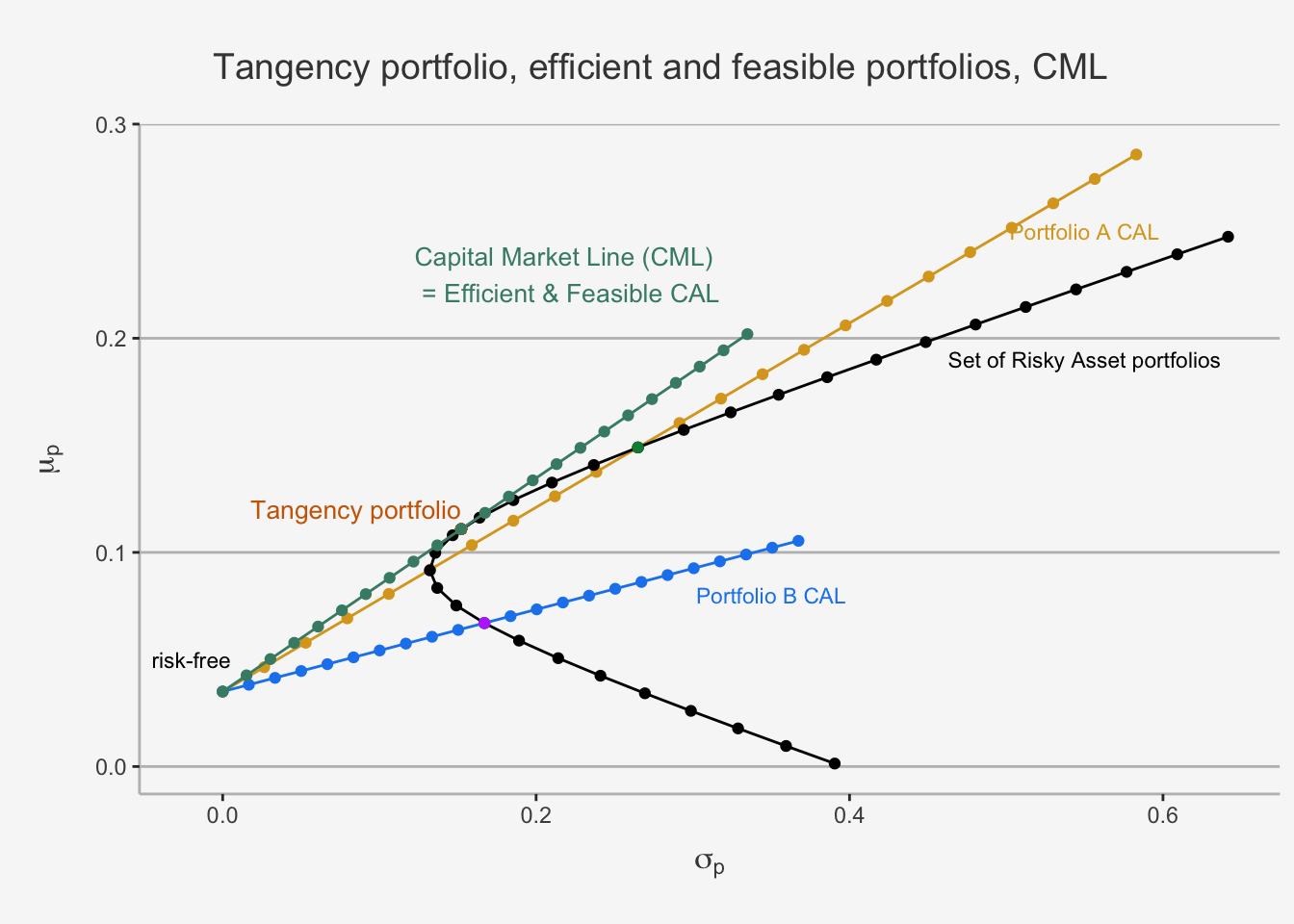

5.3.2 Mutual Fund Theorem and Derivation of the Capital Market Line (CML)

We now obtained a calculative method to get the weights of both risky assets such that we can maximise the sharpe ratio and obtain efficient and feasible portfolios. Now, we still need to incorporate the risk-free asset into our portfolio. We stated that the efficient portfolios are a combination of risk-free assets and the tangency portfolio. Consequently, they can lie on the line with intercept of the risk-free rate and slope of the Sharpe Ratio of the tangency portfolio. Thus, we can derive the formula for the efficient and feasible set of portfolios in the same way as we did for the usual CAL.

To do so, we first calculate the expected return as well as standard deviation of a portfolio consisting of the tangency portfolio and the risk-free asset.

\[ \begin{align} \mu_P^e &= x_{tan}\cdot \mu_{tan} + (1-x_{tan})\cdot r_f = r_f + x_{tan}\cdot (\mu_{tan} - r_f) \\ \sigma_P^e &= x_{tan}\cdot \sigma_{tan} \end{align} \]

whereas \(x_{tan}\) is the weight we want to invest in the tangency portfolio (similar to the CAL in which we defined the weight into the risky asset). In this case, the tangency portfolio can be considered as a mutual fund two risky assets.

The optimal allocation of risky and risk-free assets in this case depends on so-called investor preferences. If the investor is risk-averse, then she will choose portfolios with a low volatility, implying a larger investment share in the risk-free asset. If she is risk-seeking, then she will choose portfolios with a higher volatility, implying a larger investment share in the risky asset. However, all the weighted portfolios of the risk-free asset and the tangency portfolio are efficient and feasible.

The CAL which combines all these portfolios is also called the Capital Market Line (CML). The slope of the CML is the maximum Sharpe Ratio. Consequently, we can derive the expected return by combining both formulas.

\[ x_{tan} = \frac{\sigma_P^e} {\sigma_{tan}} \]

This implies again that the optimal weight is proportional to the relative standard deviations of the tangency portfolio (= optimal risky portfolio) and the overall portfolio. Substituting again gives us then the CML as:

\[ \mu_P^e = r_f + \frac{\sigma_P^e}{\sigma_{tan}} \cdot(\mu_{tan} - r_f) \]

In this case, we can again take the first derivative of the portfolio return, \(\mu_P^e\), w.r.t the risk of the portfolio, \(\sigma_P^e\), and obtain the slope of the efficient and feasible CAL, which is the Sharpe Ratio of the Tangency Portfolio:

\[ \frac{\delta \mu_P^e}{\delta \sigma_P^e} = SR_{tan} = \frac{\mu_{tan} - r_f}{\sigma_{tan}} \]

Similar to the CAL before, this is the excess return per additional unit of risk. In the case of the tangency portfolio, this is both efficient and feasible, implying that it has the best ratio of all weighted portfolios. This makes sense, given the theoretical foundations we discussed earlier.

# First define some return and risk characteristics

mu_A = 0.149

sigma_A = 0.265

mu_B = 0.074

mu_B = 0.067

sigma_B = 0.167

mu_f = 0.035

sigma_f = 0

rho_Af = 0

sigma_Af = 0

rho_Bf = 0

sigma_Bf = 0

# Then, we also define a sequence of 30 portfolio weights for A and B

x_A = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_A

# Create the expected return as well as the variance and standard deviation of each portfolios

mu_Af = x_A*mu_A + x_f*mu_f

var_Af = x_A^2*sigma_A^2 + x_f^2*sigma_f^2 + 2*x_A*x_f*sigma_Af

sd_Af = sqrt(var_Af)

# Do the same for PF B

x_B = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_B

mu_Bf = x_B*mu_B + x_f*mu_f

var_Bf = x_B^2*sigma_B^2 + x_f^2*sigma_f^2 + 2*x_B*x_f*sigma_Bf

sd_Bf = sqrt(var_Bf)

# Create the tangency portfolio

## Define the sequence

x_tan = seq(from=-0.8, to=2.2, by=0.1)

x_f = 1 - x_tan

## Define the expected return and std of the tangency pf

mu_tan <- df_t_AB$`Expected Return`

sigma_tan <- df_t_AB$`Standard Deviation`

## Calculate the metrics

mu_tanf = x_tan*mu_tan + x_f*mu_f

var_tanf = x_tan^2*sigma_tan^2 + x_f^2*sigma_f^2 + 2*x_tan*x_f*sigma_Af

sd_tanf = sqrt(var_tanf)

# Create a data frame for the relationship

risk_return_df_rf <- as.data.frame(cbind(mu_Af, sd_Af, mu_Bf, sd_Bf, mu_tanf, sd_tanf, x_A, x_f))

colnames(risk_return_df_rf) <- c("Portfolio_Return_A", "Portfolio_Risk_A", "Portfolio_Return_B", "Portfolio_Risk_B", "Portfolio_Return_Tangency_PF", "Portfolio_Risk_Tangency_PF", "Weight_A", "Weight_B")

# Subset with only efficient PFs

risk_return_df_rf_sub <- subset(risk_return_df_rf, Weight_A >= 0)

# Now, let's visualise the relationship

risk_return_df_rf_sub %>%

ggplot(aes(x= Portfolio_Risk_A, y = Portfolio_Return_A)) +

geom_point(color = "goldenrod") +

geom_path(color = "goldenrod") +

geom_point(data = risk_return_df, aes(x = Portfolio_Risk, y = Portfolio_Return)) +

geom_path(data = risk_return_df, aes(x = Portfolio_Risk, y = Portfolio_Return)) +

geom_point(color = "dodgerblue2", aes(x= Portfolio_Risk_B, y = Portfolio_Return_B)) +

geom_path(color = "dodgerblue2", aes(x = Portfolio_Risk_B, y = Portfolio_Return_B)) +

annotate('text',x = 0.55 ,y = 0.25, label = "Portfolio A CAL", size = 3, color = "goldenrod") +

annotate('text',x = 0.35 ,y = 0.08, label = "Portfolio B CAL", size = 3, color = "dodgerblue2") +

annotate('text',x = -0.02 ,y = 0.05, label = "risk-free", size = 3, color = "black") +

annotate('text',x = 0.55 ,y = 0.19, label = paste('Set of Risky Asset portfolios'), size = 3, color = "black") +

geom_point(data = subset(risk_return_df, Weight_A == 1), color = "springgreen4", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(risk_return_df, Weight_B == 1), color = "darkorchid1", aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(aes(x=sigma_t_AB, y = mu_t_AB), color = "darkorange3") +

annotate('text',x = 0.085 ,y = 0.12, label = "Tangency portfolio", size = 3.5, color = "darkorange3") +

geom_point(color = "aquamarine4", aes(x= Portfolio_Risk_Tangency_PF, y = Portfolio_Return_Tangency_PF)) +

geom_path(color = "aquamarine4", aes(x = Portfolio_Risk_Tangency_PF, y = Portfolio_Return_Tangency_PF)) +

annotate('text',x = 0.22 ,y = 0.23, label = "Capital Market Line (CML) \n = Efficient & Feasible CAL", size = 3.5, color = "aquamarine4") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Tangency portfolio, efficient and feasible portfolios, CML") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

The concept of the CML as efficient and feasible CAL is an important concept when considering asset returns in the case of the CAPM. The major difference is that

- the CAPM uses the systematic risk component (the covariance of the security with the market relative to the variance of the market) as factor which quantifies the excess return per additional unit of risk

- the CAPM uses a Market portfolio instead of the tangency portfolio to account for the risk associated.

However, we understand that a Market Portfolio is nothing else than a portfolio consisting of multiple assets. Consequently, before we can dive into the CAPM derivation, it is useful to consider MV optimisation cases in a general setting. That is, in settings in which we have N assets.

5.4 Markowitz Portfolio Theory with N Risky Assets

In this chapter, we generalise the mean-variance optimisation of Markowitz. That is, we allow to incorporate a non-sparse model configuration of N assets, whereas N can be any potentially large number. Such a generalisation allows us to assess the MV portoflio allocation in real-world settings, and to comprehend whether a general allocation technique follows the same principles as the two-asset case. Further, by introducing the general case we can extend the idea of Markowitz one step further and draw a market portfolio consisting of these N assets. This will become especially handy when trying to bridge the idea of MV optimising investors related to systematic and idiosyncratic risk components, one of the foundations of the Capital Asset Pricing Model (CAPM).

However, generalising the two asset case into N assets poses mathematical difficulties. For instance when working with large portfolios, the algebraic representation of portfolios becomes quite burdensome and heavy to comprehend. However, as we have seen in the matrix algebra repetition, the use of linear algebra manipulations can greatly simplify the calculations in larger dimensional spaces. Further, they allow for an efficient computation of the portfolios, as they use faster paths to perform the respective operations (such as matrix multiplications). As such, they both facilitate improved views and speed to the portfolio construction. As a consequence, when considering general cases of portfolio construction, we will work with linear algebra.

5.4.1 The theoretical foundations of N Assets

We start again with the basic configuration of any portfolio. That is, we assume that the returns are IID and follow an approximately normal distribution, as we already did in the last chapter on the SIM. Further, we consider a portfolio consisting of N assets, whereas N can be any arbitrary number (e.g. 100’000). Moreover, \(R_i\) is the return of asset i. In this case, we assume that the following holds for each asset i:

\[ \begin{align} R_i &\sim N(\mu_i, \sigma_i^2)\\ cov(R_i,R_j) &= \sigma_{ij} \end{align} \]

For any portfolio consisting of the N assets, we assume that the following holds:

\[ \begin{align} \mu_p &= \sum_{i=1}^Nx_i\mu_i\\ \sigma_p^2 &= \sum_{i=1}^Nx_i^2\sigma_i^2 + 2\sum_{i=1}^n\sum_{i \neq j}x_ix_j\sigma_{ij} \\ \sum_{i=1}^N x_i &= 1 \end{align} \]

whereas \(x_i\) represents the weights allocated to asset i, which is not constrained to short-selling. That is, we allow for \(x_i\) to also include negative weights. We will extend this idea later on.

As we have seen, the variance of the portfolio depends on:

- N individual variance terms

- N(N-1) individual covariance terms

To illustrate this. A portfolio consisting of 100’000 assets has a variance consisting of N = 100’000 variance terms and N(N-1) = 100’000*99’999 = 9’999’900’000 covariance terms. You already notice that trying to write this down in non matrix notation is quite impossible.

5.4.1.1 Repetition of the Matrix Notation

Let’s quickly recap the main portfolio moments in matrix notation.

We first define the return structure. To do so, we create a \(N \times 1\) vector containing the asset returns as well as a \(N \times 1\) vector of weights for any period. That is:

\[ \textbf{R} = \begin{pmatrix} R_1 \\ \vdots \\ R_N \end{pmatrix}, \textbf{x} = \begin{pmatrix} x_1 \\ \vdots \\ x_N \end{pmatrix} \]

As we understand it, the vector is a random vector. We know that the probability distribution of any random vector is just the joint probability distribution of its individual constituents. Further, we know that a linear transformation of this vector still follows the same, linearly adjusted, joint probability distribution. As the Markowitz model assumes joint normality and since this distribution is characterised entirely by its mean, variance and covariance properties, we can easily express the entire framework with two matrices.

First, let’s define the matrix of expected returns.

\[ \textbf{R} = E\left[\begin{pmatrix} R_1 \\ \vdots \\ R_N \end{pmatrix}\right] = \begin{pmatrix} E[R_1] \\ \vdots \\E[R_N] \end{pmatrix} = \begin{pmatrix} \mu_1 \\ \vdots \\ \mu_N \end{pmatrix} = \mu \]

Then, we can define the \(N \times N\) variance-covariance matrix.

\[ \begin{align} var(\textbf{R}) &= \begin{pmatrix} var(R_1) & cov(R_1,R_2) & \dots & cov(R_1, R_N) \\ cov(R_2, R_1) & var(R_2) & \dots & cov(R_2, R_N) \\ \vdots & \vdots & \ddots & \vdots \\ cov(R_N, R_1) & cov(R_N, R_2) & \dots & var(R_N) \end{pmatrix} \\ &= \begin{pmatrix} \sigma_1^2 & \sigma_{12} & \dots & \sigma_{1N} \\ \sigma_{21} & \sigma_2^2 & \dots & \sigma_{2N} \\ \vdots & \vdots & \ddots & \vdots \\ \sigma_{N1} & \sigma_{N2} & \dots & \sigma_N^2 \end{pmatrix}\\ &= \Sigma \end{align} \]

which is a symmetric and positive definite matrix given that no perfect multicollinearity exists an no random variable is a constant.

Based on these two formulations, we can now calculate the expected portfolio return and variance.

The expected portfolio return is calculated as the matrix product of the weights and the expected returns :

\[ \mu_P = E[\textbf{x}'\textbf{R}] = \textbf{x}'\mu = \begin{pmatrix} x_1 & \dots & x_N \end{pmatrix} \cdot \begin{pmatrix} \mu_1 \\ \vdots \\ \mu_N \end{pmatrix} = x_1\mu_1 + \dots + x_N\mu_N \]

Also, we have shown that the variance of a linear combination of random vectors \(var(\textbf{x}'\textbf{R})\) can be written as \(\textbf{x}'\Sigma\textbf{x}\). \[ \begin{align} var(\textbf{x}'\textbf{R}) = \textbf{x}'\Sigma\textbf{x} &= \begin{pmatrix} x_1 & \dots & x_N \end{pmatrix} \cdot \begin{pmatrix} \sigma_1^2 & \sigma_{12} & \dots & \sigma_{1N} \\ \sigma_{21} & \sigma_2^2 & \dots & \sigma_{2N} \\ \vdots & \vdots & \ddots & \vdots \\ \sigma_{N1} & \sigma_{N2} & \dots & \sigma_N^2 \end{pmatrix} \cdot \begin{pmatrix} x_1 \\ \vdots \\ x_N \end{pmatrix} \\ &= \sum_{i=1}^Nx_i^2\sigma_i^2 + 2\sum_{i=1}^n\sum_{i \neq j}x_ix_j\sigma_{ij} \end{align} \]

Lastly, the covariance of the returns on portfolio x and portfolio y can be calculated according to the repetition on the covariance between linear combination of two random vectors. For instance, if we have portfolio x and y with different weights such that \(x \neq y\), the transformation property allows us to calculate their portfolio covariance matrix as:

\[ \begin{align} var(\textbf{x}'\textbf{R}) = \textbf{x}'\Sigma\textbf{y} &= \begin{pmatrix} x_1 & \dots & x_N \end{pmatrix} \cdot \begin{pmatrix} \sigma_1^2 & \sigma_{12} & \dots & \sigma_{1N} \\ \sigma_{21} & \sigma_2^2 & \dots & \sigma_{2N} \\ \vdots & \vdots & \ddots & \vdots \\ \sigma_{N1} & \sigma_{N2} & \dots & \sigma_N^2 \end{pmatrix} \cdot \begin{pmatrix} y_1 \\ \vdots \\ y_N \end{pmatrix} \end{align} \]

5.4.1.2 Application to the Swiss Market Index

We can now start working with real-world portfolio applications.

A1 <- read.csv("~/Desktop/Master UZH/Data/A1_dataset_01_Ex_Session.txt", header = T, sep = "\t", dec = '.')

date = as.Date(A1[,1])

# Here, we first assign a date format to the date variable, otherwise the xts package cannot read it.

# Other forms of transformation (as.POSIXct etc.) would certainly also work.

A1ts <- xts(x = A1[,-1], order.by = date)

A1_return <- Return.calculate(A1ts, method = 'discrete') # Calculation of Returns

A1_returnts <- xts(x = A1_return, order.by = date)

# Only take some of the companies

A6_ts_ret <- A1_returnts[, c("ABB", "Actelion", "Adecco", "Credit_Suisse_Group", "Compagnie_Financiere_Richemont", "Geberit", "Givaudan", "Julius_Baer_Group", "LafargeHolcim", "Nestle_PS", "Novartis_N", "Roche_Holding", "SGS", "The_Swatch_Group_I", "Swiss_Re", "Swisscom", "Syngenta", "Transocean", "Zurich_Insurance_Group_N")][-1,]['2013-01-31/2016-12-31']

# Calculate the mean vector and covariance matrix

mean_ret <- colMeans(A6_ts_ret, na.rm = T)

cvar_ret <- cov(na.omit(A6_ts_ret))

# Calculate individual weights

for (i in colnames(A6_ts_ret)[-19]){

weight = runif(10000, min=-1.5, max=1.5)

names = paste0(i,"_weight")

if (i == "ABB"){

weight_final = weight

names_final = names

}

else {

weight_final = cbind(weight_final, weight)

names_final = cbind(names_final, names)

}

}

# Get the dataframe and matrix on the weights

weight_df <- as.data.frame(weight_final)

colnames(weight_df) <- names_final

weight_df$sum <- rowSums(weight_df)

weight_df$Zurich_Insurance_Group_N <- 1 - weight_df$sum

weight_df$sum <- NULL

matrix_weights <- as.matrix(weight_df)

# Calculate the feasible expected returns and standard deviations

feasible_pf_mu = matrix_weights%*%mean_ret

feasible_pf_sd = apply(matrix_weights, 1, function(x) sqrt(t(x) %*% cvar_ret %*% x))

# Construct the feasible dataframe, consisting of 100 differently weighted risk and return combinations

feasible_pf <- as.data.frame(cbind(feasible_pf_mu, feasible_pf_sd))

colnames(feasible_pf) <- c("Portfolio_Return", "Portfolio_Risk")

# Now, let's visualise the relationship

feasible_pf %>%

ggplot(aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(color = "grey") +

geom_point(data = subset(feasible_pf, Portfolio_Risk <= 0.12 & Portfolio_Return >= 0), color = "darkorchid3", shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.12 & Portfolio_Risk <= 0.14 & Portfolio_Return >= 0.07), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.14 & Portfolio_Risk <= 0.16 & Portfolio_Return >= 0.09), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.16 & Portfolio_Risk <= 0.18 & Portfolio_Return >= 0.1), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.18 & Portfolio_Risk <= 0.20 & Portfolio_Return >= 0.11), color = "darkorchid3",shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.2 & Portfolio_Risk <= 0.22 & Portfolio_Return >= 0.11), color = "darkorchid3", shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Porfolio Frontier of SMI PF for 10'000 different weights") +

labs(color='Factor Portfolios') +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

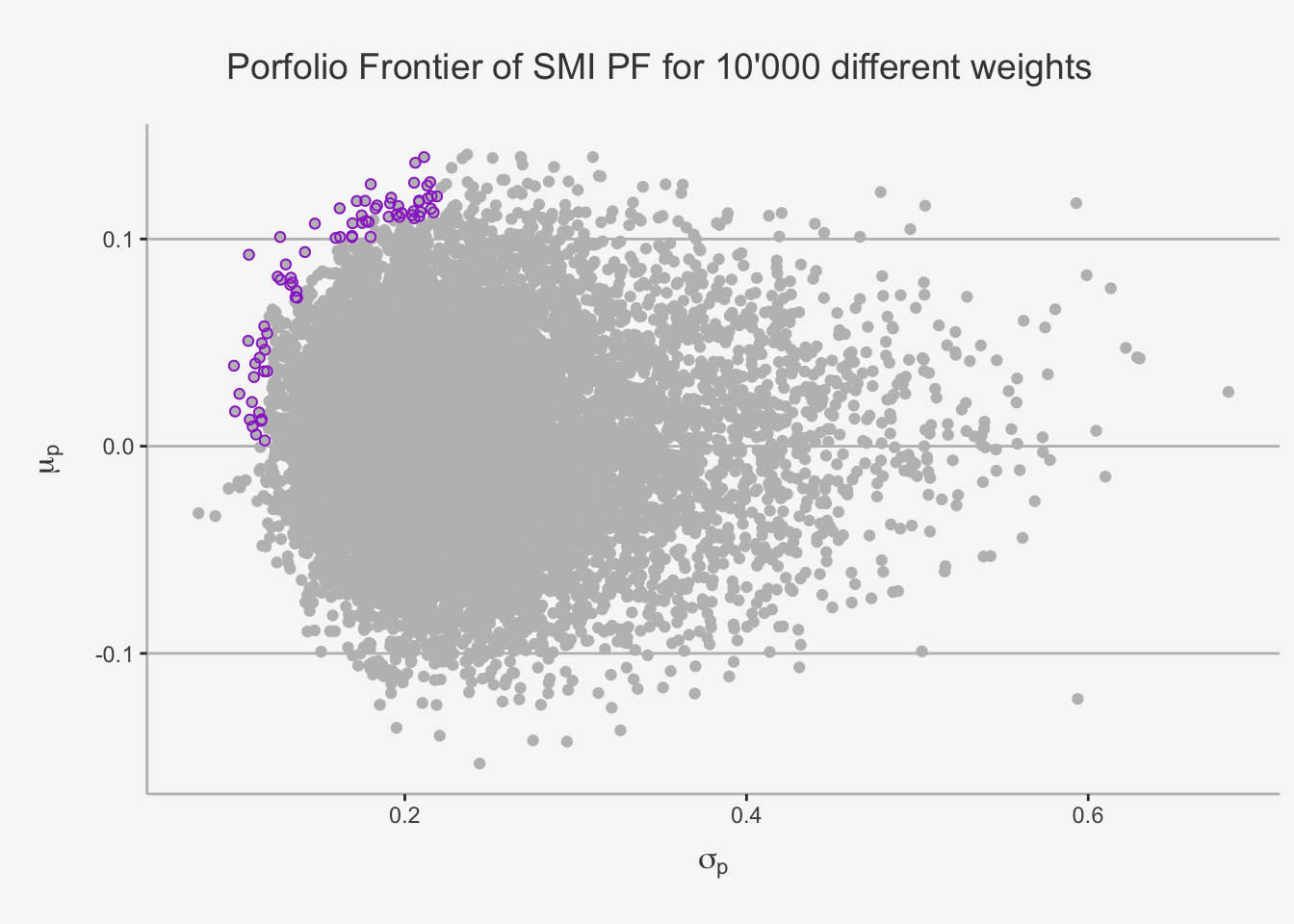

When we introduce a large number (e.g. 10’000) different weightings for a portfolio consisting of a large fraction of Swiss bluechip companies, then we can see that the outlines are similar to the Markowitz Bullet we encountered in the two-asset case. That is, the outer boundaries of this combination appear to be similar to the set of feasible portfolios when only considering two assets, with the tip of the distribution representing the Minimum Variance portfolio. Further, recall that, in the case of a bullet shape, the set of efficient and feasible portfolios when considering only risky assets is represented by the outer boundary of portfolios at or above the minimum-variance portfolio, as they deliver the smallest possible risk for each unit of return. These portfolios are represented by the purple bounded dots.

The results show that a portfolio consisting of N assets follows approximately the same intuition and distribution as we have observed in the two-asset case, which is handy for generalisation purposes.

5.4.2 The Minimum Variance Portfolio for N assets

Next, we start to define efficient portfolios by first determining the constitution of weights for the minimum-variance portfolio. As we said, we can do this by following some matrix algebra properties that are also given in the repetition part.

Note again that the definition of the minimum-variance portfolio is a constrained optimisation problem. That is, we want to find a weight vector such that the variance of the portfolio is minimised, conditional that all weights add up to 1.

That is, we define again the variance-covariance matrix and matrix-multiply it with its respective, optimal weights, to obtain a scalar which quantifies the smallest possible standard deviation.

We do this by defining the following problem:

\[ \begin{align} \min_{x} \sigma_p^2 = \textbf{x'}\Sigma\textbf{x} && \text{ s.t. } \textbf{x'}\textbf{1} = 1 \end{align} \] In order to solve this, we need to make use of the Lagrangian formula. The Lagrangian is a commonly used tool to define constrained optimisation problems. In essence, we define two functions in which the constraint is set equal to zero. That is, we define the following:

\[ L(\textbf{x}, \lambda) = \textbf{x'}\Sigma\textbf{x} + \lambda(\textbf{x'}\textbf{1} - 1) \]

We now take the FOC’s for both x and \(\lambda\) and obtain:

\[ \begin{align} \frac{\delta L} {\delta \textbf{x}} &= 2\Sigma\textbf{x} + \lambda1 \\ \frac{\delta L} {\delta \lambda} &= \textbf{x'}\textbf{1} - 1 \end{align} \]

We can represent this is vector notation as:

\[ \begin{pmatrix} 2\Sigma & \textbf{1} \\ \textbf{1'} & 0 \end{pmatrix} \begin{pmatrix} \textbf{x} \\ \lambda \end{pmatrix} = \begin{pmatrix} 0 \\ 1 \end{pmatrix} \]

This is nothing else than backinduction of the usual matrix multiplication:

\[ \begin{align} 2\Sigma \cdot \textbf{x} + \lambda\cdot \textbf{1} &= 0\\ \textbf{1'}\cdot \textbf{x} + 0\cdot \lambda &= 1 \end{align} \]

If you don’t know anymore what we did here, please refer to Chapter 3.2.4 (System of linear equations).

In general, this implies \(\textbf{A} \cdot \textbf{z} = \textbf{b}\), where:

\[ \textbf{A} = \begin{pmatrix} 2\Sigma & \textbf{1} \\ \textbf{1'} & 0 \end{pmatrix}, \textbf{z} = \begin{pmatrix} \textbf{x} \\ \lambda \end{pmatrix}, \textbf{b} = \begin{pmatrix} 0 \\ 1 \end{pmatrix} \]

Note that we are interested in the weights for this system of linear equations. That is, we want to find out the vector of \(\textbf{x}\), as these minimise the global variance of our portfolio. In order to retrieve these weights, it is quite straight-forward to see that we need to take the inverse the matrix \(\textbf{A}\) and multiply it with the matrix \(\textbf{b}\). This is only feasible if the matrix \(\textbf{A}\) is invertible. As long as we can determine the elements in \(\textbf{A}^{-1}\), then we can solve for the values of x in the linear equations system of the vector \(\textbf{z}\). In this case, the first N elements of the vector \(\textbf{z}\) will be the variance minimising weights.

Let’s do this for our portfolio:

# Define the matrix A. It consists of:

## - the covariance matrix multiplied by two

## - a column right to the covariance matrix, consisting of 1's

## - a row right below the covariance matrix and the additional column, consisting of 1's and one zero (the zero is in the right-bottom of the resulting matrix)

mat_A <- rbind(cbind(2*cvar_ret, rep(1, dim(cvar_ret)[1])), c(rep(1, dim(cvar_ret)[1]), 0))

# Define the vector b as vector of zeros with dimension of the covariance matrix (19 in this case) and one 1 at the bottom

vec_b <- c(rep(0, dim(cvar_ret)[1]), 1)

# Calculate the inverse and perform matrix multiplication to get the vector z

z <- solve(mat_A)%*%vec_b

# Derive the first N elements of the vector to retrieve the actual values

x_MV <- z[1:dim(cvar_ret)[1]]

# Check that the sum adds up to 1

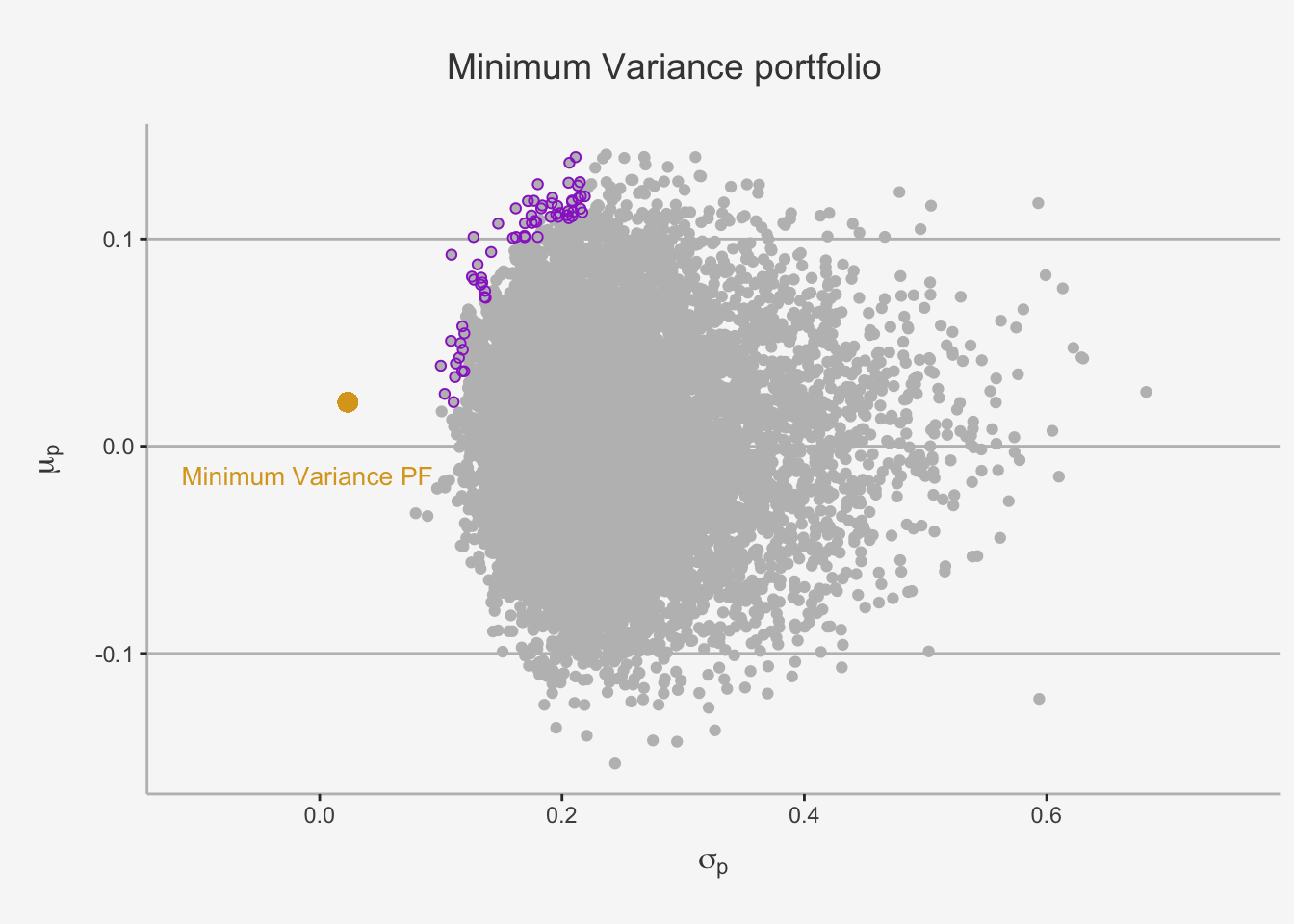

sum(x_MV)## [1] 1Now, we got the appropriate weights per company of our portfolio. Let’s calculate the expected return as well as the standard deviation.

# Calculate the expected return:

mu_MV <- x_MV %*% mean_ret

sd_MV <- sqrt(t(x_MV) %*% cvar_ret %*% x_MV)

# Create the appropriate dataframe

MV_PF <- as.data.frame(cbind(mu_MV, sd_MV, t(x_MV)))

colnames(MV_PF) <- c("Mu_MV", "Sd_MV",names_final, "Zurich_Insurance_Group_Weight")

as.data.frame(t(MV_PF))## V1

## Mu_MV 0.02126306

## Sd_MV 0.02321974

## ABB_weight 0.09286278

## Actelion_weight 0.15521845

## Adecco_weight 0.46741548

## Credit_Suisse_Group_weight -0.21374129

## Compagnie_Financiere_Richemont_weight 0.07482529

## Geberit_weight 0.18564346

## Givaudan_weight -0.10473719

## Julius_Baer_Group_weight 0.16461766

## LafargeHolcim_weight -0.11800013

## Nestle_PS_weight 0.38496885

## Novartis_N_weight 0.32945099

## Roche_Holding_weight 0.21882863

## SGS_weight 0.14659400

## The_Swatch_Group_I_weight -0.44283485

## Swiss_Re_weight 0.01692448

## Swisscom_weight 0.09185468

## Syngenta_weight -0.01166684

## Transocean_weight -0.02403974

## Zurich_Insurance_Group_Weight -0.41418471# Now, let's visualise the relationship

feasible_pf %>%

ggplot(aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(color = "grey") +

# This is just to colour in the "optimal PFs"

geom_point(data = subset(feasible_pf, Portfolio_Risk <= 0.12 & Portfolio_Return >= 0.02), color = "darkorchid3", shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.12 & Portfolio_Risk <= 0.14 & Portfolio_Return >= 0.07), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.14 & Portfolio_Risk <= 0.16 & Portfolio_Return >= 0.09), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.16 & Portfolio_Risk <= 0.18 & Portfolio_Return >= 0.1), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.18 & Portfolio_Risk <= 0.20 & Portfolio_Return >= 0.11), color = "darkorchid3",shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.2 & Portfolio_Risk <= 0.22 & Portfolio_Return >= 0.11), color = "darkorchid3", shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

# Calculate and plot the Minimum Variance PF

geom_point(color = "goldenrod", aes(x= MV_PF$Sd_MV, y = MV_PF$Mu_MV), size = 3) +

annotate('text',x = -0.01 ,y = -0.014, label = "Minimum Variance PF", size = 3.5, color = "goldenrod") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Minimum Variance portfolio") +

labs(color='Factor Portfolios') +

xlim(-0.10, 0.75) +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.43,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

5.4.3 The Efficient Frontier for N assets

5.4.3.1 Determining Efficient Portfolios

Now that we were able to determine the global minimum variance portfolio, we can go a step further and define the set of efficient portfolios under the assumption of N risky assets. In order to determine the set of risky assets that are efficient, we can follow the same strategy as we already did with 2 assets.

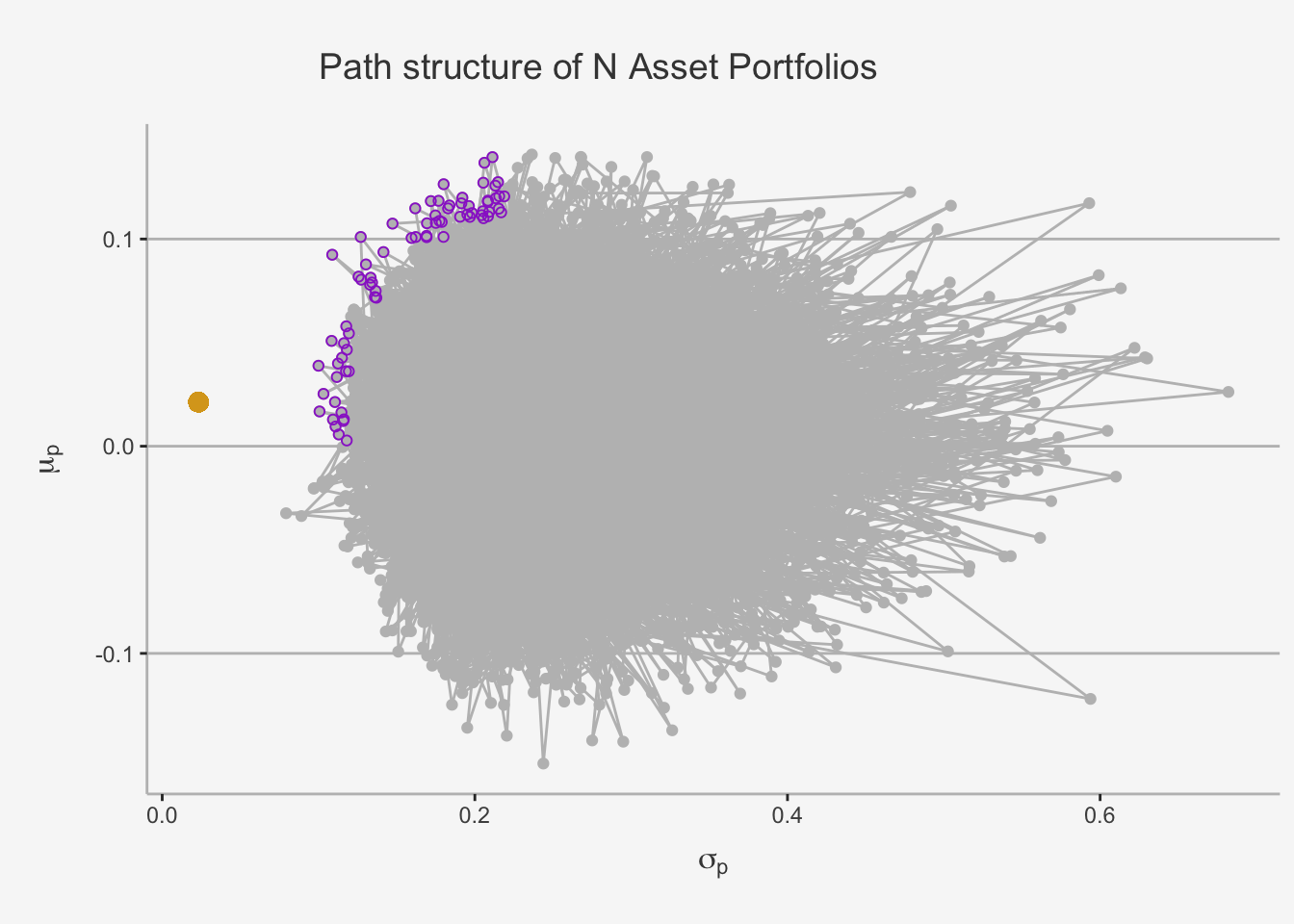

In the case of 1 or 2 assets, the investment opportunity set is graph or a hyperbola (bullet), respectively. However, in the N assets case, the opportunity set is given by values that do not follow a clear trend. This is due to the fact that their risk properties largely depend solely on the covariance between the assets (remember the proof we made in the risk and return chapter?). In essence, when we try to plot a graph as we did in the 2 asset case, this is the result due to the covariances \(\sigma_{ij}\).

In the N asset case, we understand that deriving a clear structure that incorporates the position and behaviour of each constituent portfolio, as the covariance properties do not allow for any clearly visible trends. However, as we stated earlier, we do not need to care about every single relation within our portfolio set. Under the assumption that investors want to maximise return given a level of risk or, equivalently, minimise risk given a level of return, we can simplify the asset allocation to only incorporate the set of efficient and feasible portfolios, which are characterised by the purple dots. As it is depicted graphically, the efficient portfolios are the most outer portfolios at or above the minimum variance portfolio. S

imilar to the two-asset case, these are Analogously, these are the portfolios that, for a given level of return, induce the lowest risk.

In essence, we need to find the portfolios with the best possible risk-return trade-off. We can define these portfolios throughout a constrained optimisation problem in two distinct ways. First, we need to find the portfolios that, for a given level of risk, induce the highest return. This is the case when:

\[ \begin{align} \max_x \mu_P = \textbf{x'}\mu && \text{s.t. } \sigma_P^2 = \textbf{x'}\Sigma\textbf{x} = \sigma_{P, Spec}^2\text{ and } \textbf{x'}1 = 1 \end{align} \] whereas \(\sigma_{P, Spec}\) defines a given, specific level of risk.

Second, we need to find the portfolios that, for a given level of return, induce the lowest risk. This is the case when:

\[ \begin{align} \min_x \sigma_P^2 = \textbf{x'}\Sigma\textbf{x} && \text{s.t. } \mu_{P}^2 = \textbf{x'}\mu = \mu_{P,Spec} \text{ and } \textbf{x'}1 = 1 \end{align} \]

whereas \(\mu_{P, Spec}\) defines a given, specific level of return.

In reality, we often solve for the second constrained optimisation problem, because investors can better define given levels of return than given levels of risk. Just as in the two asset case, the resulting efficient frontier will resemble one side of an hyperbola and is often called the “Markowitz bullet”.

As such, we can solve the optimisation problem again with the Lagrangian function. That is, we get:

\[ L(x, \lambda_1, \lambda_2) = \textbf{x'}\Sigma\textbf{x} + \lambda_1(\textbf{x'}\mu - \mu_{P,Spec}) + \lambda_2(\textbf{x'}1 - 1) \]

We can solve this problem by taking three partial derivatives w.r.t. their individual components:

\[ \begin{align} \frac{\delta L}{\delta x} &= 2\Sigma\textbf{x} + \lambda_1\mu + \lambda_2\textbf{1} = 0 \\ \frac{\delta L}{\delta \lambda_1} &= \textbf{x'}\mu - \mu_{P,Spec} = 0 \\ \frac{\delta L}{\delta \lambda_2} &= \textbf{x'}1 - 1 = 0 \end{align} \]

Again, we can represent this system of linear equations as follows:

$$ {} {} = _{}

$$

Now, we can again take the inverse of \(\textbf{A}\) and calculate the vector \(\textbf{z}\) through matrix multiplication. Lastly, we take the first N elements from this vector to obtain the weights that minimise the risk of a portfolio for a given level of expected return.

Let’s now try to replicate this idea for the underlying portfolio. Note that in order to be able to solve this portfolio, we need to specify a given level of return first which then can be used to minimise the risk associated.

Let’s make this easy in our case. Let’s just say we want to have the return of the company Givaudan the portfolio under consideration.

# We first define again the Matrix A

mat_A_EF <- rbind(cbind(2*cvar_ret, mean_ret, rep(1,dim(cvar_ret)[1])),

cbind(t(mean_ret), 0, 0),

cbind(t(rep(1,dim(cvar_ret)[1])), 0, 0))

# Then, we define the vector b

## Define the EW return

mu_spec <- mean_ret[7]

## Define the vector b

vec_b_EF <- c(rep(0, dim(cvar_ret)[1]), mu_spec, 1)

# Now, we can solve for the respective weights

z_EF <- solve(mat_A_EF)%*%vec_b_EF

# Then, we take the first N elements to get the respective weights

x_EF <- z_EF[1:dim(cvar_ret)[1]]

# Check that the sum adds up to 1

sum(x_EF)## [1] 1Perfect. We now can take these weights again and calculate the respective risk for the given level of return.

# Sanity Check: See if the matrix multiplication of the weights and the mean returns indeed gives us the average return again

ifelse(round(x_EF%*%mean_ret, 4) == round(mu_spec, 4), "The Sanity Check passes as we get that the expected return is equal to the specified return!", 0)## [,1]

## [1,] "The Sanity Check passes as we get that the expected return is equal to the specified return!"# Now, let's calculate the risk

sd_EF <- sqrt(t(x_EF) %*% cvar_ret %*% x_EF)

# Further, calculate the risk under the EW strategy

EW_weights <- rep(1/dim(cvar_ret)[1], dim(cvar_ret)[1])

sd_EW <- sqrt(t(EW_weights) %*% cvar_ret %*% EW_weights)

# Now, we can take these results and compare them with each other:

EF_df <- as.data.frame(cbind(round(mu_spec,4), round(sd_EF,4), round(sd_EW,4)))

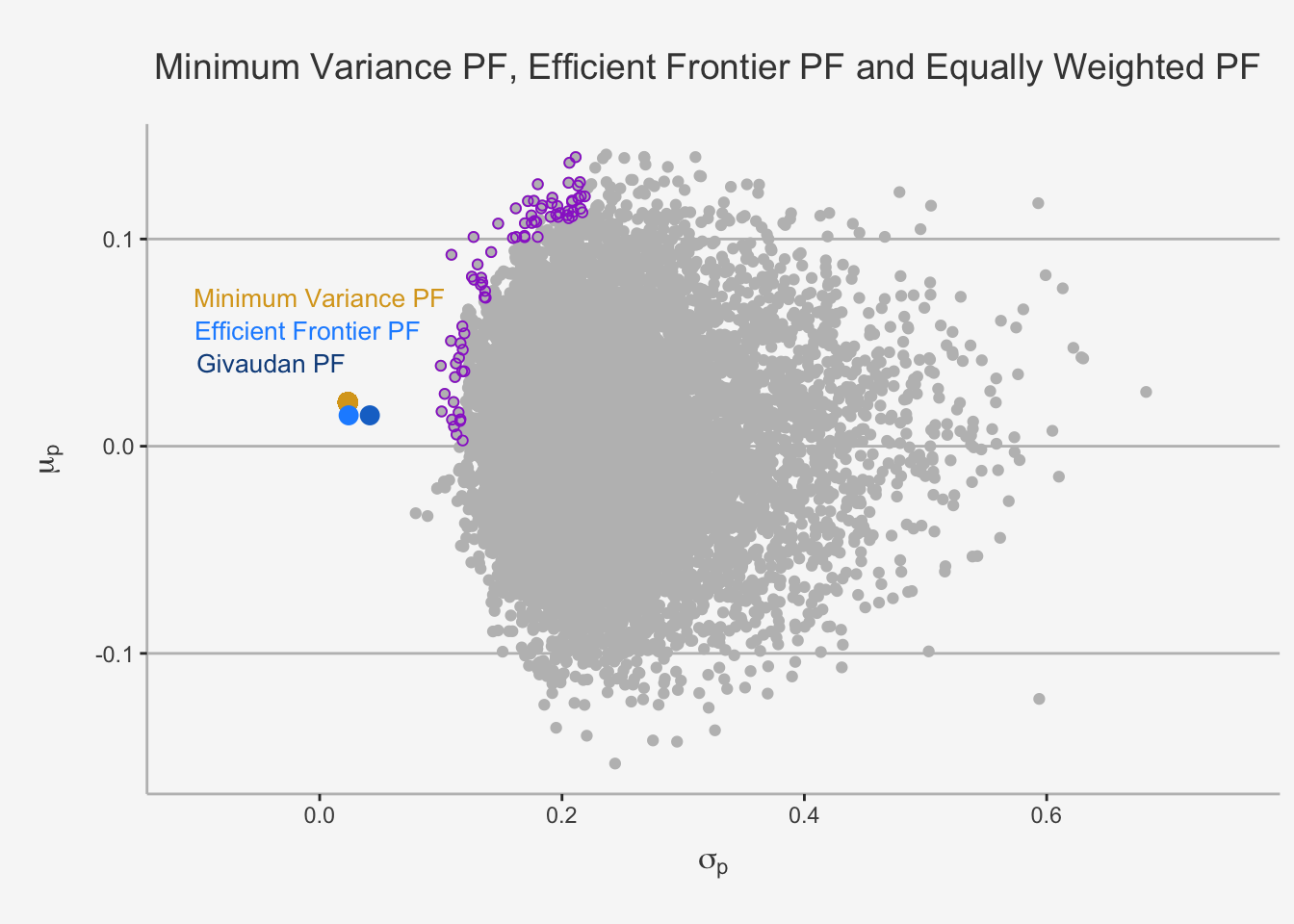

colnames(EF_df) <- c("Return EW PF", "Risk Optimised", "Risk EW PF")

EF_df## Return EW PF Risk Optimised Risk EW PF

## Givaudan 0.0149 0.0239 0.0414Great, as we can see, we can greatly improve the risk associated with a given level of return.

# Now, let's visualise the relationship

feasible_pf %>%

ggplot(aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(color = "grey") +

# This is just to colour in the "optimal PFs"

geom_point(data = subset(feasible_pf, Portfolio_Risk <= 0.12 & Portfolio_Return >= 0), color = "darkorchid3", shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.12 & Portfolio_Risk <= 0.14 & Portfolio_Return >= 0.07), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.14 & Portfolio_Risk <= 0.16 & Portfolio_Return >= 0.09), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.16 & Portfolio_Risk <= 0.18 & Portfolio_Return >= 0.1), color = "darkorchid3", shape = 1,aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.18 & Portfolio_Risk <= 0.20 & Portfolio_Return >= 0.11), color = "darkorchid3",shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

geom_point(data = subset(feasible_pf, Portfolio_Risk > 0.2 & Portfolio_Risk <= 0.22 & Portfolio_Return >= 0.11), color = "darkorchid3", shape = 1, aes(x= Portfolio_Risk, y = Portfolio_Return)) +

# Calculate and plot the Minimum Variance PF

geom_point(color = "goldenrod", aes(x= MV_PF$Sd_MV, y = MV_PF$Mu_MV), size = 3) +

# Calculate and plot the optimal PF for a given level of risk

geom_point(data = subset(EF_df, `Return EW PF` == 0.0149 & `Risk Optimised` == 0.0239), color = "dodgerblue1", size = 3, aes(x=`Risk Optimised` , y= `Return EW PF`)) +

geom_point(data = subset(EF_df, `Return EW PF` == 0.0149 & `Risk EW PF` == 0.0414), color = "dodgerblue3", size = 3, aes(x=`Risk EW PF` , y= `Return EW PF`)) +

annotate('text',x = 0.0 ,y = 0.072, label = "Minimum Variance PF", size = 3.5, color = "goldenrod") +

annotate('text',x = -0.01 ,y = 0.056, label = "Efficient Frontier PF", size = 3.5, color = "dodgerblue1") +

annotate('text',x = -0.04 ,y = 0.040, label = "Givaudan PF", size = 3.5, color = "dodgerblue4") +

ylab(expression(mu[p])) + xlab(expression(sigma[p])) + ggtitle("Minimum Variance PF, Efficient Frontier PF and Equally Weighted PF") +

labs(color='Factor Portfolios') +

xlim(-0.10, 0.75) +

theme(plot.title= element_text(size=14, color="grey26",

hjust=0.3,lineheight=2.4, margin=margin(15,0,15,0)),

panel.background = element_rect(fill="#f7f7f7"),

panel.grid.major.y = element_line(size = 0.5, linetype = "solid", color = "grey"),

panel.grid.minor = element_blank(),

panel.grid.major.x = element_blank(),

plot.background = element_rect(fill="#f7f7f7", color = "#f7f7f7"),

axis.title.y = element_text(color="grey26", size=12, margin=margin(0,10,0,10)),

axis.title.x = element_text(color="grey26", size=12, margin=margin(10,0,10,0)),

axis.line = element_line(color = "grey"))

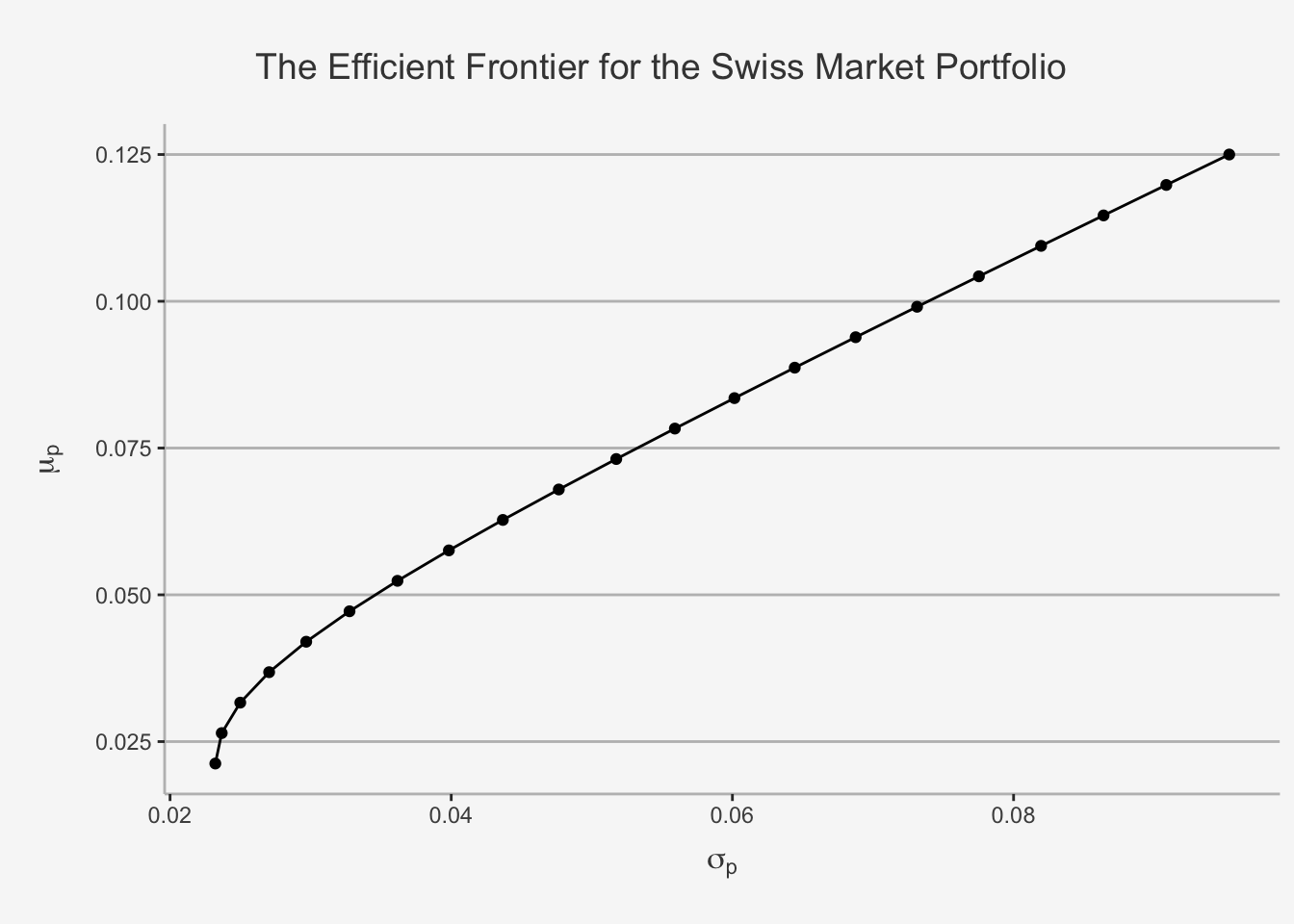

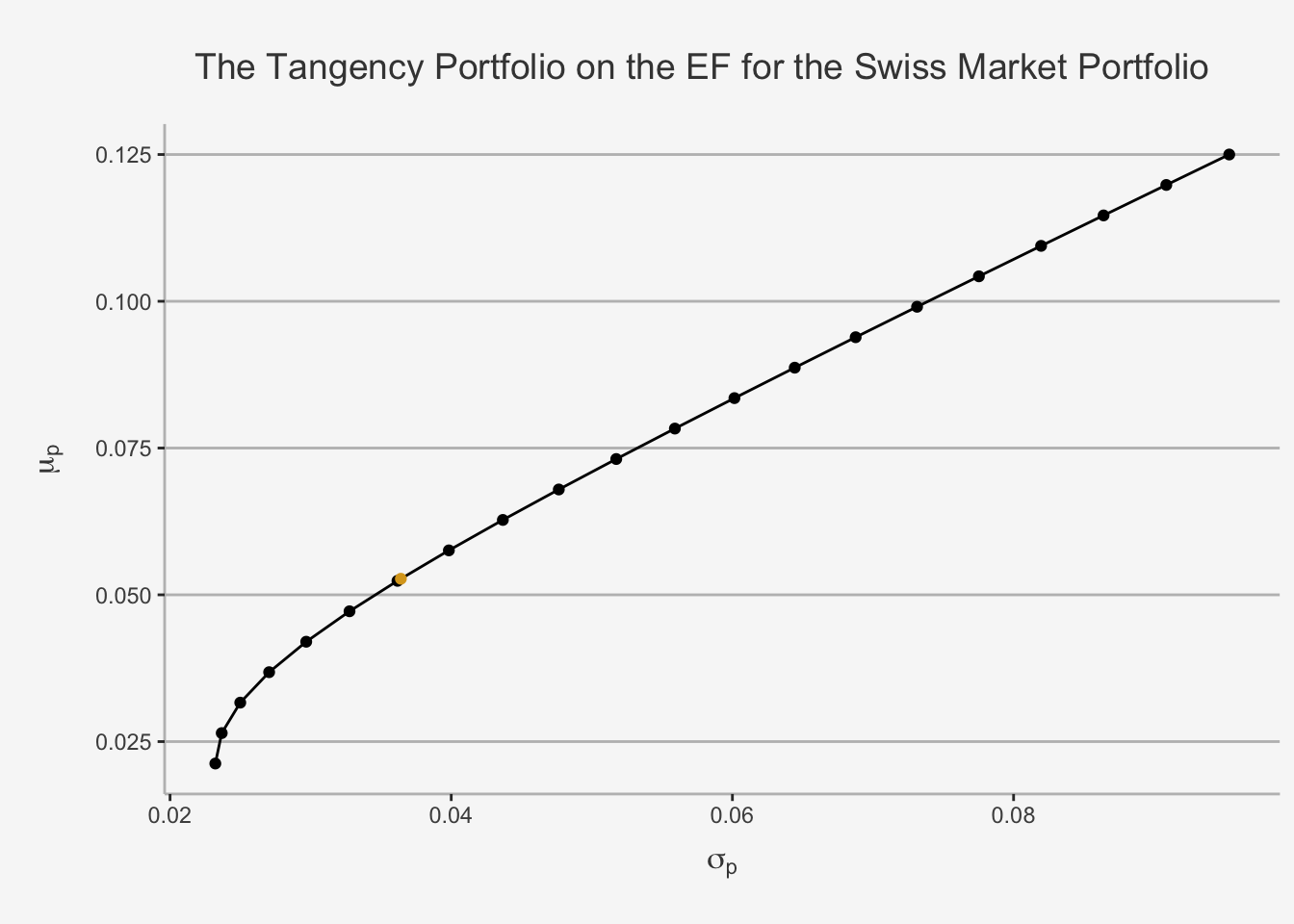

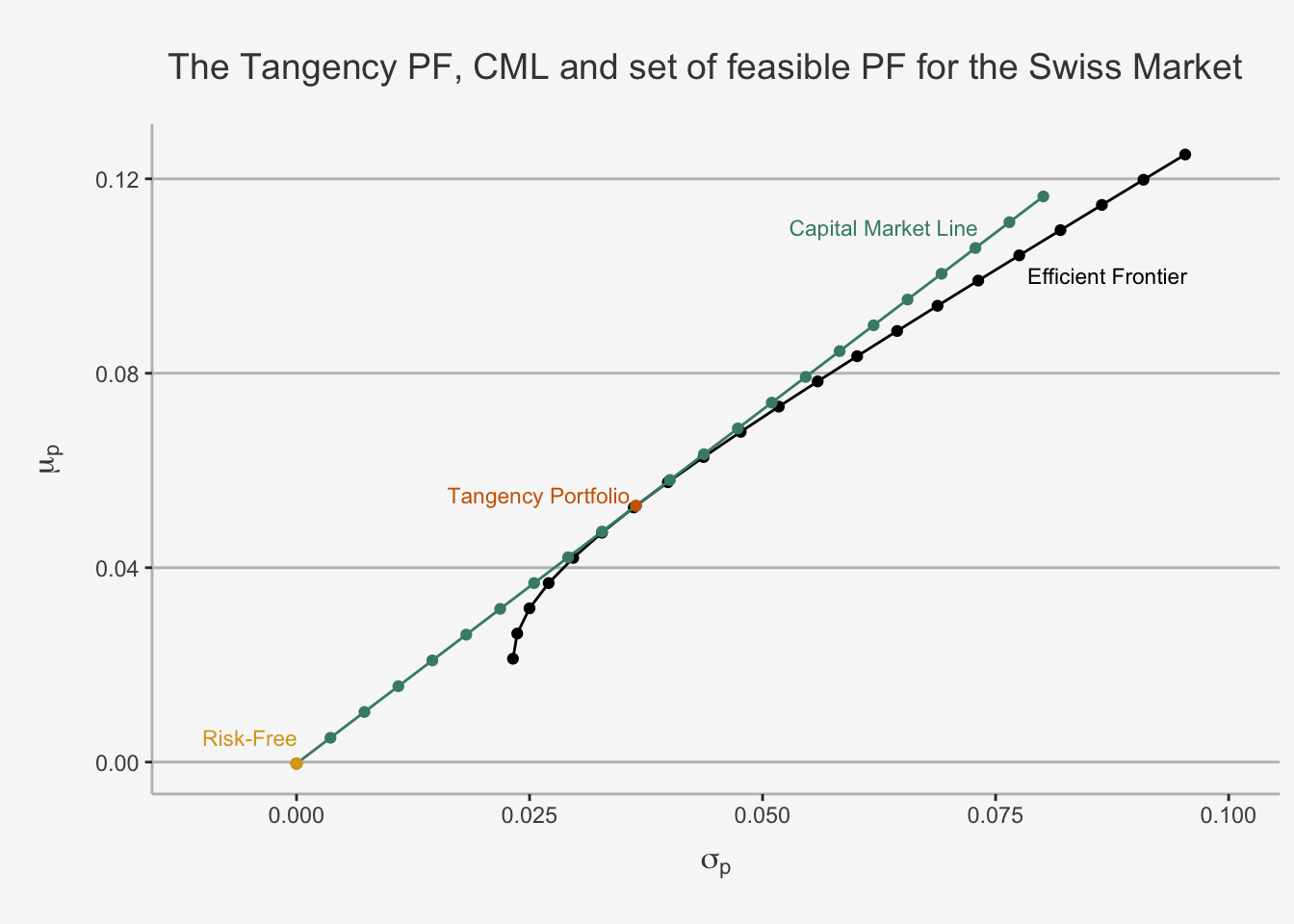

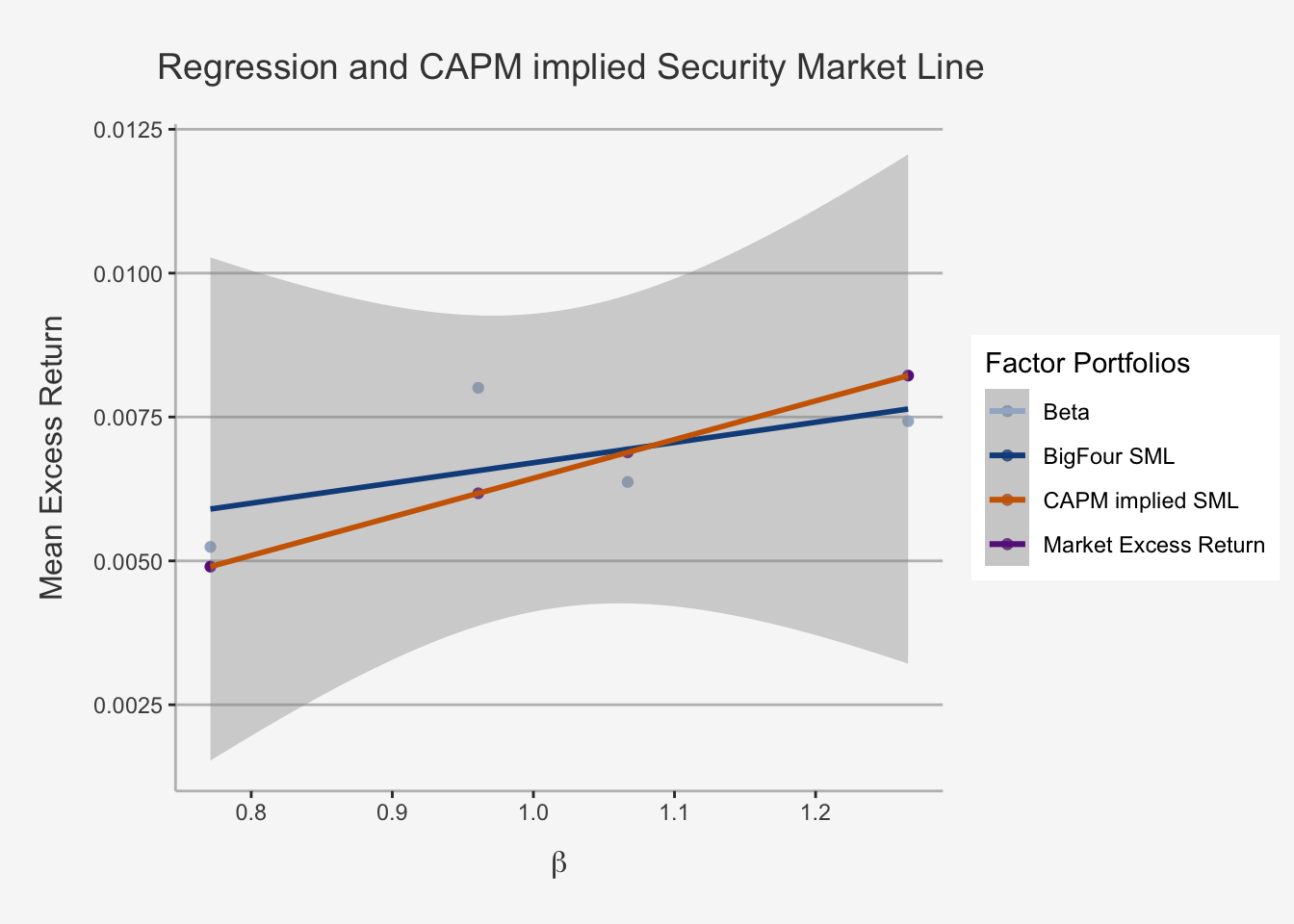

5.4.3.2 Determining the Efficient Frontier

Although we now have a way to compare and compute the efficient portfolio for any given level of return (e.g. the risk minimising portfolio at each expected return level), we may find it still cumbersome to actually compute the entire efficient frontier. This is because it may simply be unfeasible to calculate the appropriate minimum level of risk for each potential return level, as it would require us to perform potentially hundreds of thousand of individual calculations that, aggregated, would form the Markowitz bullet of efficient and feasible portfolios we require.